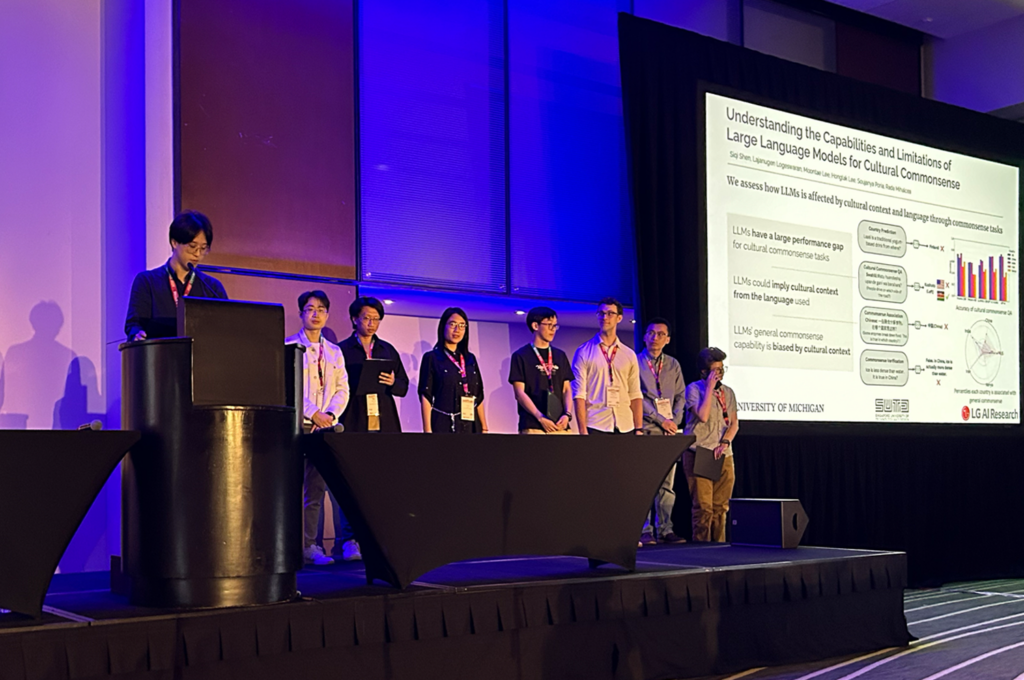

CSE researchers receive Social Impact Award at NAACL 2024

CSE researchers, led by PhD student Siqi Shen along with Janice M. Jenkins Collegiate Professor of Computer Science and Engineering Rada Mihalcea, have won the Social Impact Award at the 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL) for their work’s significance in supporting fair and responsible AI. Their paper, “Understanding the Capabilities and Limitations of Large Language Models for Cultural Commonsense,” explores LLMs’ understanding of culture-specific common sense and its impact on AI cultural bias. The work was done in collaboration with Prof. Honglak Lee of CSE, Lajanugen Logeswaran and Moontae Lee from LG AI, and Soujanya Poria from Singapore University of Technology and Design.

NAACL is a leading conference in computational linguistics and natural language processing, bringing together top researchers from around the world to share the latest findings in these areas. The Social Impact Award is given to just one paper among the 800+ presented at the conference and recognizes research that has a significant and positive influence on society.

“This paper tackles a crucial and timely issue regarding cultural biases in LLMs,” read a statement from the NAACL award committee. “By exposing cultural biases inherent in LLMs, the paper underscores the urgent need for developing culturally-aware language models to mitigate societal biases and foster inclusivity in AI technologies.”

Common sense, an underpinning of human reasoning and cognition, refers to fundamental understandings that are widely agreed upon and shared by most people, such as lemons being sour or each person having one biological mother.

“Commonsense knowledge is fundamental to our existence in the world,” said Shen. “Shared understandings help us make sense of everyday situations and form a foundation for several other cognitive functions like reasoning and solving problems.”

While many of these understandings are globally shared, some norms and knowledge vary by culture; this is referred to as cultural common sense. For example, white wedding dresses might be the norm in Western countries like the U.S., but wedding dresses are typically red in many Asian cultures. Similarly, cars drive on the right side of the road in much of the world but on the left in Japan, the UK, and other countries.

It might be relatively easy for an LLM to learn what is considered universal common sense, but when beliefs and norms vary by culture, can these models adapt according to context? Considerable research in natural language processing has explored and demonstrated LLMs’ ability to grasp and replicate general common sense, but few studies have evaluated their performance on culture-specific tasks.

“Cultural common sense is based on traditions that are often unspoken and unwritten, and must be learned over time,” said Shen. “We were curious whether LLMs were able to understand and mirror this type of cultural knowledge as well as more universal, factual knowledge.”

To address this, Shen, Mihalcea, and their coauthors conducted a thorough investigation into the abilities and limitations of several LLMs in understanding cultural common sense. In particular, they tested four LLMs’ performance on culture-specific commonsense tasks, as well as how often an LLM associates general common sense with a specific culture. Their examination focused on five cultures defined by country, namely, China, India, Iran, Kenya, and the U.S.

Their testing revealed significant discrepancies in LLMs’ understanding of cultural common sense across different cultures. The models showed better understanding of the cultures of larger countries, while consistently performing worse on tasks related to Iran and Kenya.

The researchers also found that LLMs tend to associate general common sense with more dominant cultures, attributing commonsense statements to the U.S. almost seven times more often than to Kenya. Their results further showed that prompting LLMs in languages other than English resulted in an overall decrease of up to 20% in accuracy, with no improvement in cultural understanding.

These findings highlight a troubling pattern in LLM development. While these models can reliably complete tasks that relate to dominant cultures, their performance with respect to less-represented cultures leaves much to be desired, resulting in the potential for cultural misunderstanding and bias.

“This knowledge gap reflects a persistent trend of cultural bias among LLMs,” said Mihalcea. “We hope these findings will help the field think more critically about how we can make these models more culturally aware and inclusive going forward.”
