Hardware model checker takes gold at international competition

The system automatically proves the trustworthiness of a hardware design, outperforming the competition in nearly every category.

Enlarge

Enlarge

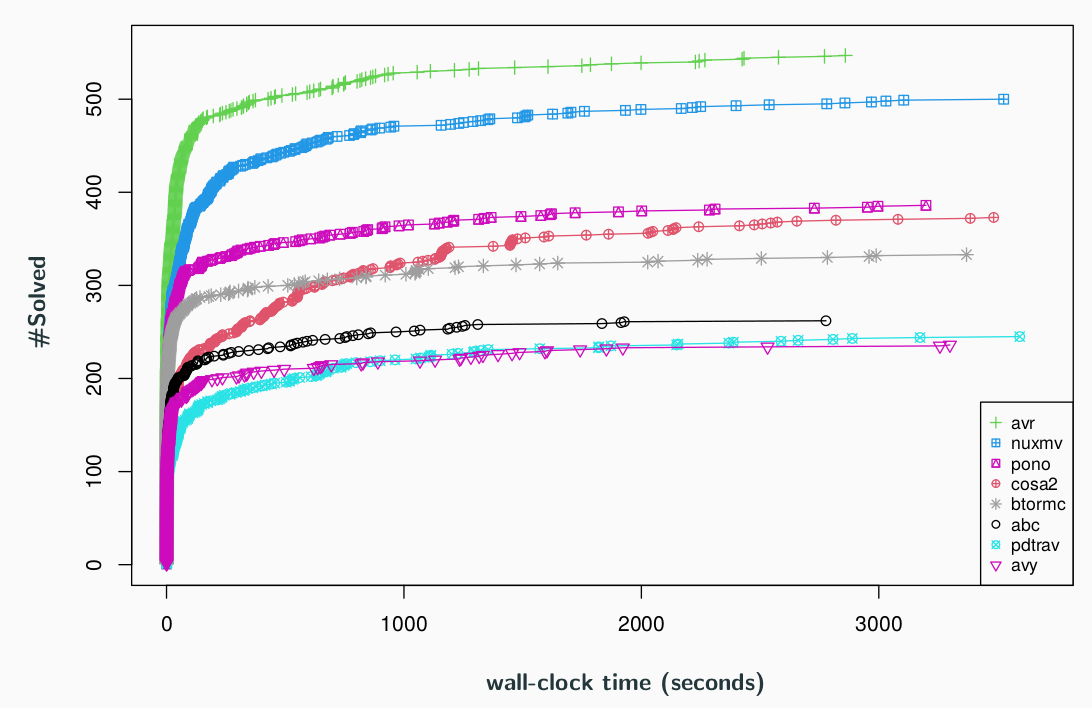

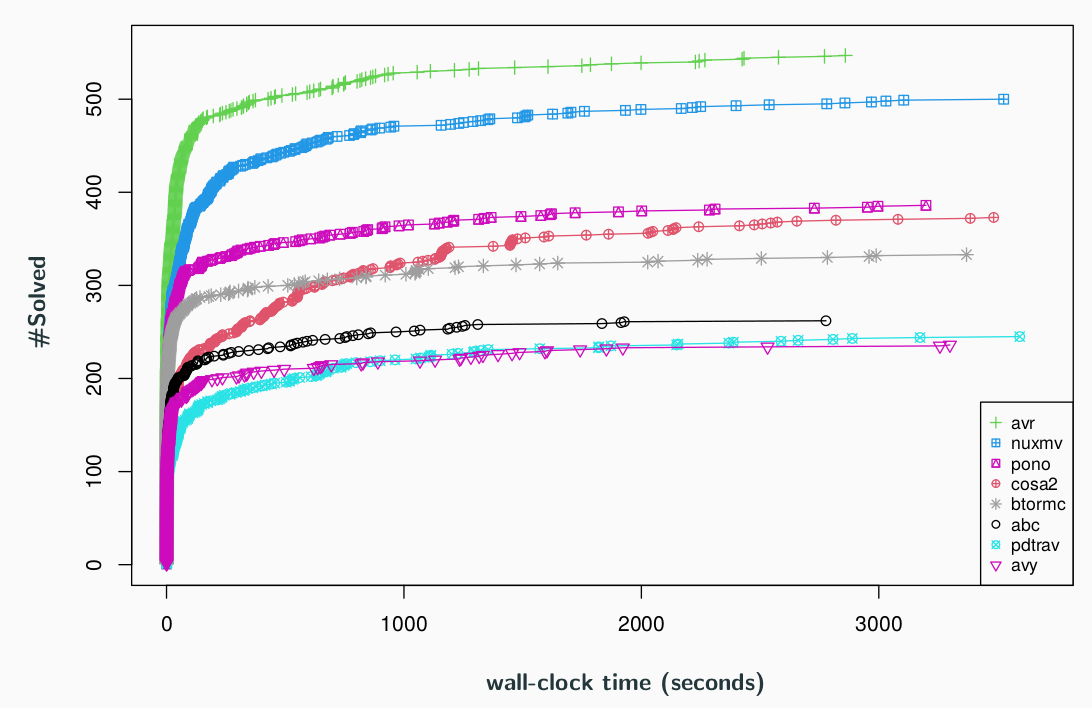

Work by Prof. Karem Sakallah and PhD student Aman Goel took first place at the 11th edition of the Hardware Model Checking Competition (HWMCC), an event hosted by the Conference on Formal Methods in Computer-Aided Design to determine the best current method for formally verifying a hardware system. The event featured nine categories composed of a total of 639 benchmarks; the pair earned gold medals in 7 categories and a silver and bronze in the remaining two, solving the most number of benchmarks including 23 of which were not solved by any other verifier.

Hardware design specifications like those tested at HWMCC are the logical descriptions of a proposed processor, including how the logical components are arrayed on the chip and how data “moves” between them. Researchers like Sakallah, Goel, and other competitors aim to prove that these designs are free of bugs and security flaws by reasoning about them as mathematical formulas. This line of work is called formal verification, and can be used to safeguard against bugs in both hardware and software.

Enlarge

Enlarge

Competitors at HWMCC make use of a technique called model checking to verify the designs, which treats the hardware as a series of states. Each state is a certain configuration, accounting for the current position and flow of data through its different logical components. Some of these states are “bad states” – they lead to an error, like an overflow, and it’s the goal of formal verification to prove that these are being avoided. A processor design gets the OK if all possible states can’t lead to a bad state regardless of what input they’re given.

“When we’re talking about this complex system like a software or hardware program, it’s called a state transition system,” Sakallah explains. “It fits in different configurations and moves from one configuration to the next based on some interaction with the environment or input.”

In the past two years, the competition has shifted paradigms from what’s called the bit-level to word-level design specifications. Words, in computing, are the largest unit of data a particular processor can work with as a package. This size limit, commonly either 32 or 64 bits in modern machines, dictates the largest piece of data that can be moved in and out of working memory in a single operation, as well as the largest possible address size to designate a location in memory. Previously, the competition focused on models that accounted for the flow and logic of every bit and each single-bit operator on the chip.

Sakallah and Goel were ready for this shift — their tool was built on efforts by Sakallah to move to word-level verification dating back as early as 2009.

“This bit-level verification has been hot for the past 10-15 years, but we reason that it is not going to scale,” Sakallah says. As processor complexity grows, the number of bits being used in simultaneous operations across each chip will also continue to grow, making methods that rely on this level of analysis resource-intensive on the larger designs. By considering entire words of data instead of individual bits, the problem is simplified and far fewer components need to be considered at a granular level. In the end, the processor’s total logical flow from input to output is still accounted for. “We asked if we could raise the level of reasoning and in some cases throw out the bit level completely.”

Working at the word level means assuming that the various single-purpose circuits on the chip, which include simple things like adders, are correct. Sakallah and Goel treat these components like functions in software code, whose correctness can be proven separately.

“We say most of the bugs actually lurk in the very complex control interactions of the system,” says Sakallah. “Let’s focus on those and ignore data path operations — we’re not going to check that the adder unit is correct — and under that assumption check that the rest of the logic is correct.”

“The beauty of all of this is it focuses the analysis on the control logic, which is where these subtle bugs exist.”

The model checker they designed, called AVR (Abstractly Verifying Reachability), was the subject of multiple papers throughout its development. It automatically verifies a hardware description through a combination of abstraction techniques and a common bit-level model checking algorithm called IC3 that they re-designed at the word level. If the system being verified is found to be incorrect, it provides the user with a counterexample trace of one possible bad execution. If the system is found to be correct, it provides a safety certificate in the form of a state formula logically demonstrating its correctness.

The benchmarks it was tested on at HWMCC included a mix of designs that were already verified to be correct and others with known bugs.

MENU

MENU