Lie-detecting software uses real court case data

By studying videos from high-stakes court cases, University of Michigan researchers are building unique lie-detecting software based on real-world data.

Their prototype considers both the speaker’s words and gestures, and unlike a polygraph, it doesn’t need to touch the subject in order to work. In experiments, it was up to 75 percent accurate in identifying who was being deceptive (as defined by trial outcomes), compared with humans’ scores of just above 50 percent.

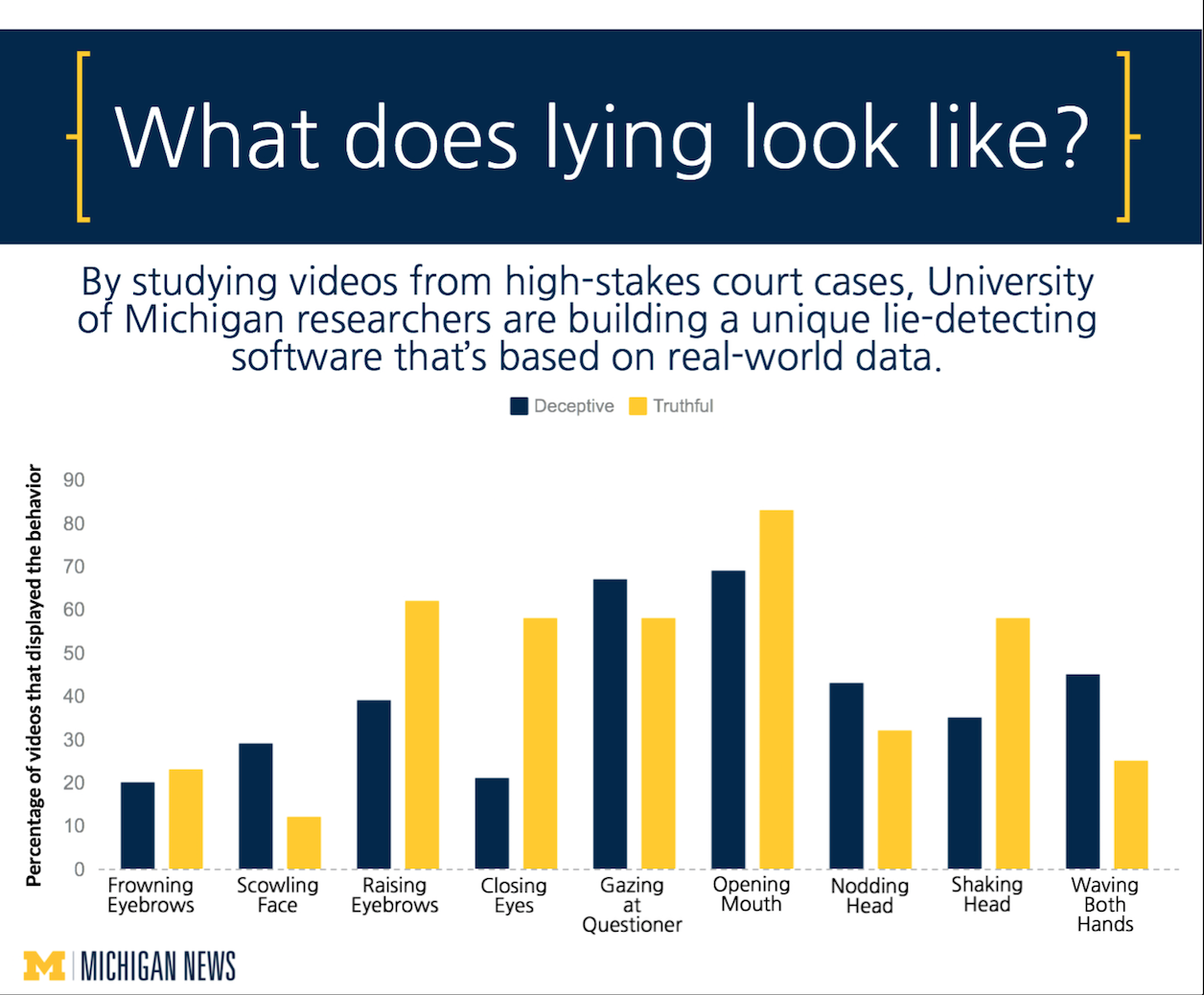

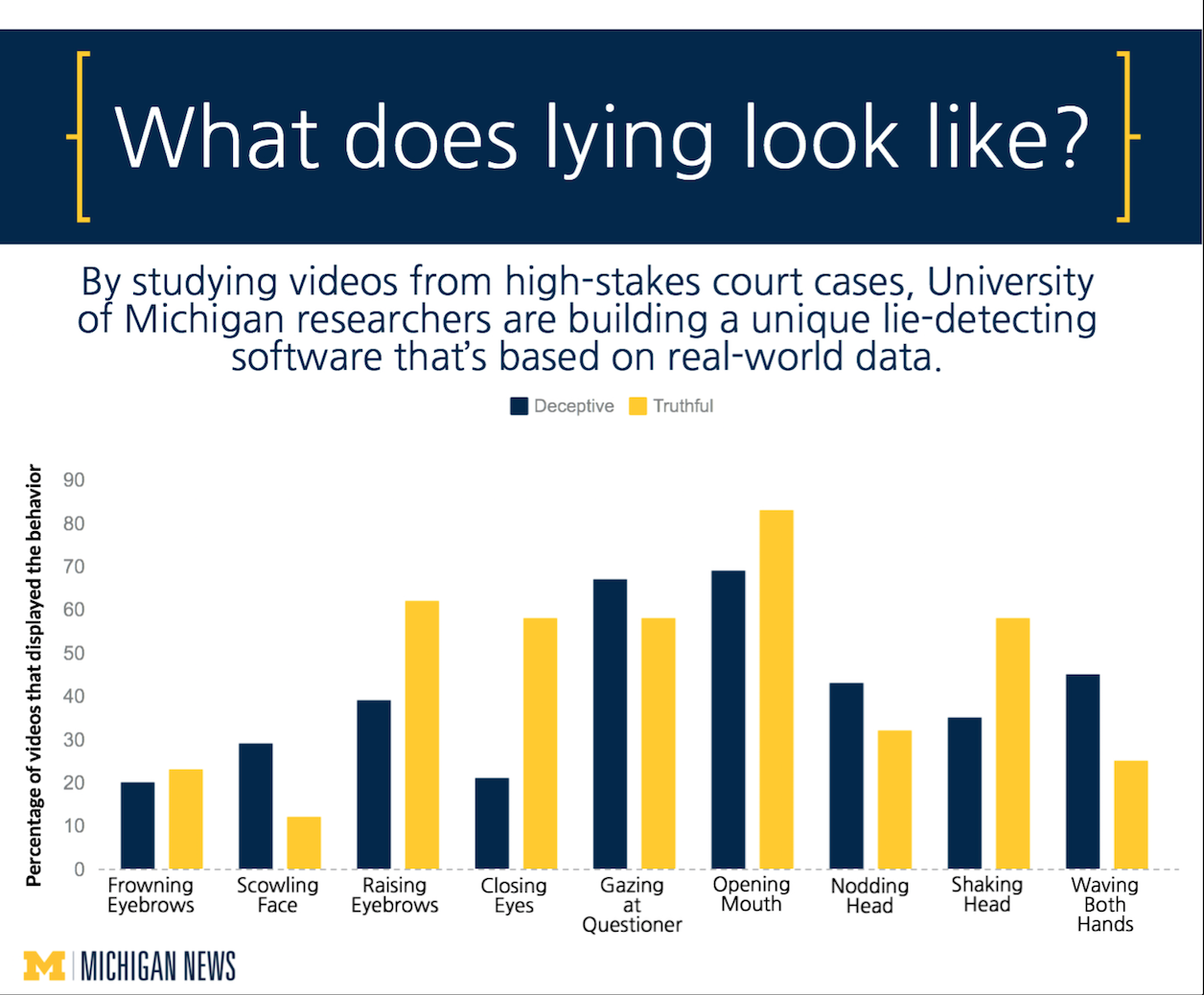

With the software, the researchers say they’ve identified several tells. Lying individuals moved their hands more. They tried to sound more certain. And, somewhat counterintuitively, they looked their questioners in the eye a bit more often than those presumed to be telling the truth, among other behaviors.

The system might one day be a helpful tool for security agents, juries and even mental health professionals, the researchers say.

To develop the software, the team used machine-learning techniques to train it on a set of 120 video clips from media coverage of actual trials. They got some of their clips from the website of The Innocence Project, a national organization that works to exonerate the wrongfully convicted.

The “real world” aspect of the work is one of the main ways it differs from laboratory-based deception studies.

“In laboratory experiments, it’s difficult to create a setting that motivates people to truly lie. The stakes are not high enough,” said Rada Mihalcea, professor of computer science and engineering who leads the project with Mihai Burzo, assistant professor of mechanical engineering at UM-Flint. “We can offer a reward if people can lie well—pay them to convince another person that something false is true. But in the real world there is true motivation to deceive.”

The videos include testimony from both defendants and witnesses. In half of the clips, the subject is deemed to be lying. To determine who was telling the truth, the researchers compared their testimony with trial verdicts.

To conduct the study, the team transcribed the audio, including vocal fill such as “um, ah, and uh.” They then analyzed how often subjects used various words or categories of words. They also counted the gestures in the videos using a standard coding scheme for interpersonal interactions that scores nine different motions of the head, eyes, brow, mouth and hands.
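The word-counting step can be illustrated with a minimal Python sketch. It assumes a small, hand-made category lexicon: the `CATEGORIES` dictionary and the `category_rates` function are hypothetical and are not the study's actual word lists or tooling. Each category is reported as a share of all words in a transcript, so that fillers such as “um” and pronoun choices can be compared across clips of different lengths.

```python
import re
from collections import Counter

# Hypothetical category lexicon for illustration only; NOT the study's word lists.
CATEGORIES = {
    "filler": {"um", "uh", "ah"},
    "self_reference": {"i", "we", "me", "us", "my", "our"},
    "other_reference": {"he", "she", "they", "him", "her", "them"},
    "certainty": {"absolutely", "definitely", "never", "always", "certainly"},
}


def category_rates(transcript: str) -> dict[str, float]:
    """Return each category's share of all word tokens in a transcript."""
    # Lowercase and split into simple word tokens (letters and apostrophes).
    tokens = re.findall(r"[a-z']+", transcript.lower())
    total = len(tokens)
    if total == 0:
        return {name: 0.0 for name in CATEGORIES}
    counts = Counter(tokens)
    # Sum the counts of every word belonging to each category, then normalize.
    return {
        name: sum(counts[word] for word in words) / total
        for name, words in CATEGORIES.items()
    }


# Usage on a made-up line of testimony (not from the study's data).
print(category_rates("Um, I never saw him. He was, uh, definitely not there."))
```

Normalizing by transcript length is a design choice in this sketch; raw counts would favor longer clips.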

The researchers fed the data into their system and let it sort the videos. When it used input from both the speaker’s words and gestures, it was 75 percent accurate in identifying who was lying. That’s much better than humans, who did only slightly better than a coin flip.
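The article does not say which learning algorithm the team used. For illustration only, the sketch below swaps in a simple nearest-centroid classifier: each clip's word features and gesture features are concatenated into one vector (often called early fusion), and a clip is labeled with whichever class, deceptive or truthful, has the closest average vector. Accuracy is estimated by leaving out one clip at a time. All function names and the toy feature values are hypothetical, and leave-one-out evaluation is an assumption rather than the study's reported protocol.

```python
from math import dist
from statistics import fmean


def fuse(word_features, gesture_features):
    """Early fusion (illustrative): concatenate verbal and non-verbal features."""
    return list(word_features) + list(gesture_features)


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [fmean(column) for column in zip(*vectors)]


def predict(train, x):
    """Nearest-centroid: pick the label whose mean training vector is closest to x."""
    by_label = {}
    for vec, label in train:
        by_label.setdefault(label, []).append(vec)
    centroids = {label: centroid(vecs) for label, vecs in by_label.items()}
    return min(centroids, key=lambda label: dist(centroids[label], x))


def leave_one_out_accuracy(data):
    """Train on all clips but one, test on the held-out clip, repeat for every clip."""
    correct = 0
    for i, (vec, label) in enumerate(data):
        train = data[:i] + data[i + 1:]
        correct += predict(train, vec) == label
    return correct / len(data)


# Hypothetical toy clips: [filler rate, self-reference rate] + [scowl, both hands].
clips = [
    (fuse([0.20, 0.05], [1, 1]), "deceptive"),
    (fuse([0.18, 0.04], [1, 0]), "deceptive"),
    (fuse([0.02, 0.12], [0, 0]), "truthful"),
    (fuse([0.03, 0.10], [0, 1]), "truthful"),
]
print(leave_one_out_accuracy(clips))
```

With unscaled inputs, features with larger ranges (here the 0/1 gesture codes) dominate the distance, so a real pipeline would typically normalize each feature first.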

“People are poor lie detectors,” Mihalcea said. “This isn’t the kind of task we’re naturally good at. There are clues that humans give naturally when they are being deceptive, but we’re not paying close enough attention to pick them up. We’re not counting how many times a person says ‘I’ or looks up. We’re focusing on a higher level of communication.”

In the clips of people lying, the researchers found common behaviors:

- Scowling or grimacing of the whole face. This appeared in 30 percent of lying videos vs. 10 percent of truthful ones.

- Looking directly at the questioner—in 70 percent of deceptive clips vs. 60 percent of truthful.

- Gesturing with both hands—in 40 percent of lying clips, compared with 25 percent of the truthful.

- Speaking with more vocal fill such as “um.” This was more common during deception.

- Distancing themselves from the action with words such as “he” or “she,” rather than “I” or “we,” and using phrases that reflected certainty.
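The percentages in the list compare how often a coded behavior appears within each class of clips. A minimal sketch of that calculation follows; the behavior code names (such as `"scowl"`) and the toy clip counts in the usage example are hypothetical, chosen only so the output mirrors the scowling figures above.

```python
def prevalence_by_label(clips, behavior):
    """Percentage of clips in each class whose coded behaviors include `behavior`.

    `clips` is a list of (set_of_behavior_codes, label) pairs; the codes are
    hypothetical stand-ins for an annotation scheme's categories.
    """
    totals, hits = {}, {}
    for codes, label in clips:
        totals[label] = totals.get(label, 0) + 1
        hits[label] = hits.get(label, 0) + (1 if behavior in codes else 0)
    return {label: 100 * hits[label] / totals[label] for label in totals}


# Hypothetical toy annotations: 10 deceptive clips and 10 truthful clips.
deceptive = [({"scowl"}, "deceptive")] * 3 + [(set(), "deceptive")] * 7
truthful = [({"scowl"}, "truthful")] * 1 + [(set(), "truthful")] * 9
print(prevalence_by_label(deceptive + truthful, "scowl"))
# {'deceptive': 30.0, 'truthful': 10.0}
```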

This effort is one piece of a larger project.

“We are integrating physiological parameters such as heart rate, respiration rate and body temperature fluctuations, all gathered with non-invasive thermal imaging,” Burzo said.

The research team also includes research fellows Veronica Perez-Rosas and Mohamed Abouelenien. A paper on the findings titled “Deception Detection using Real-life Trial Data” was presented at the International Conference on Multimodal Interaction and is published in the 2015 conference proceedings. The work was funded by the National Science Foundation, John Templeton Foundation and Defense Advanced Research Projects Agency.

