New student tool gets chips from lab to fab faster than ever

The open-source system cuts a key step in chip testing down from days or weeks to a couple of hours, on average.

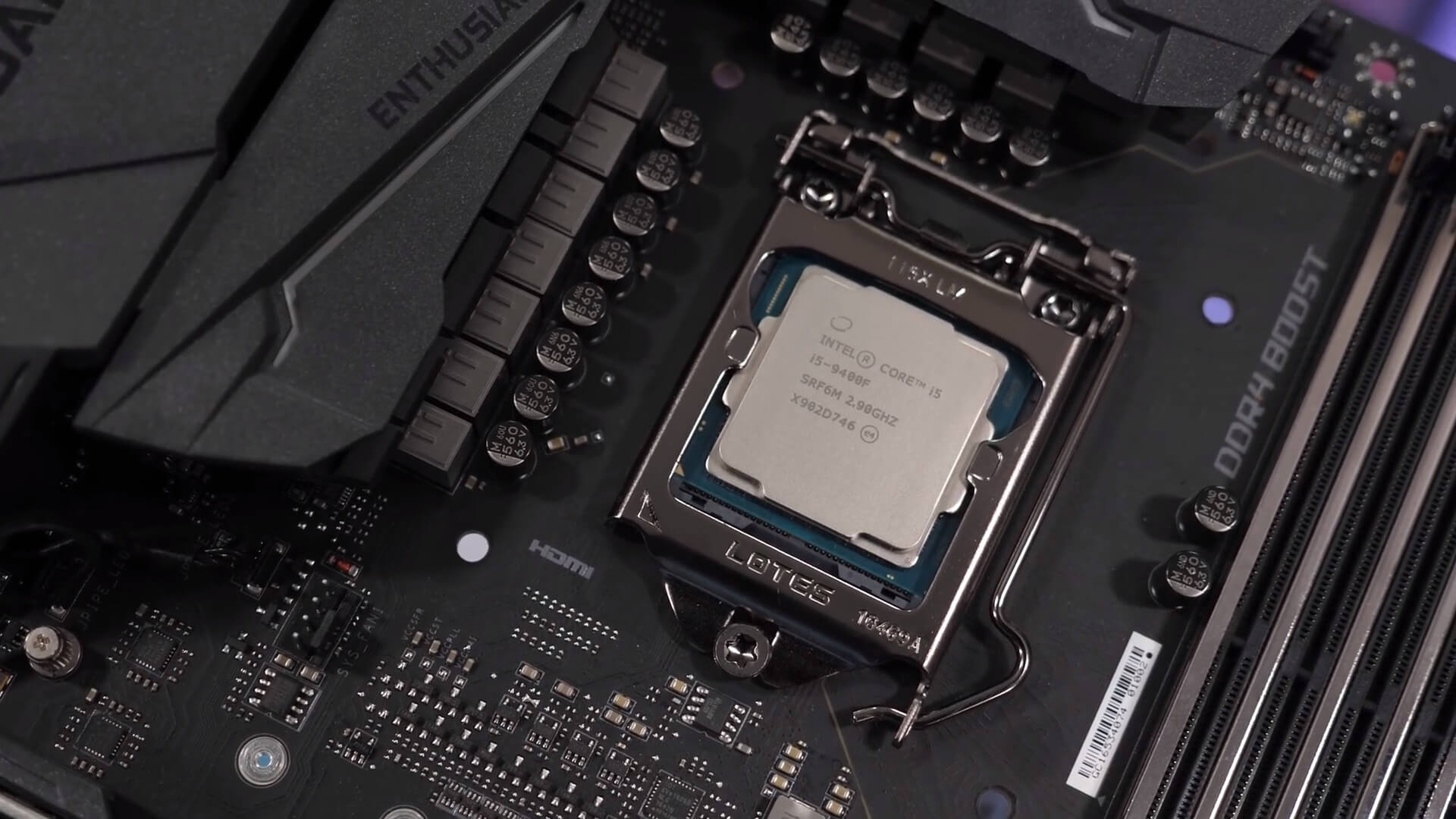


As in most areas of computer science, testing is the most tedious part of building new hardware. Because the path to a finished chip runs through physical production, chip designers have come to rely on a number of software testing tools to ease the burden. Running hardware benchmarks in a simulator saves time and money in what would otherwise be an extremely laborious test cycle involving multiple rounds of chip fabrication. Standard tools like gem5 allow fast prototyping and testing of new CPU features without the need to constantly order new silicon.

Unfortunately, that’s about where the convenience ends; running a set of instructions on simulated hardware can take up to six orders of magnitude longer than on a real-world chip.
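
To put that slowdown in perspective, here is a back-of-the-envelope calculation. It is only a sketch: the function name and the one-second workload are illustrative assumptions, and six orders of magnitude is the upper bound quoted above, not a measured figure.

```python
SECONDS_PER_DAY = 86_400


def simulated_seconds(native_seconds: float, slowdown_orders: int = 6) -> float:
    """Scale a native runtime by a slowdown of 10**slowdown_orders."""
    return native_seconds * 10 ** slowdown_orders


if __name__ == "__main__":
    # Assumed workload: one second of execution on a real chip.
    days = simulated_seconds(1.0) / SECONDS_PER_DAY
    print(f"1 s on hardware is roughly {days:.1f} days in simulation")
```

At that worst-case rate, even a single second of real execution lands in the "days or weeks" range for simulation.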

“It makes sense that simulating a complex piece of hardware in software is quite slow,” writes CSE PhD student Ian Neal in a blog post. “Not too much of a problem for small tests, which could just be simulated overnight.”

But working with a team of students and Profs. Thomas Wenisch and Baris Kasikci on entirely new microarchitectures meant Neal’s tests were anything but small. To illustrate what they were up against, Wenisch told the group that running experiments for his own PhD work took several weeks, using the same standard set of CPU benchmarks they now intended to run.

To make the whole process more manageable, Neal built an open-source tool that changes how microarchitectural simulations are done. Over the course of the project he designed Lapidary, which saves designers time by simulating small snippets of instructions drawn from the full benchmark program and using them to estimate average performance with a high degree of accuracy.

“It is a much better way to evaluate hardware on simulators than the traditional method,” says Kevin Loughlin, a CSE PhD student collaborating with Neal. “We actually think it has potential to leave a significant impact on the way people do evaluations in architecture.”

Existing attempts to speed up simulation time involve running random samplings of simulation checkpoints on the simulated test hardware rather than the entire block of benchmark code. This cuts down the wait for repeated and iterative tests substantially. The catch is that setting up these checkpoints requires a full simulated run of the program on a CPU setup that’s simpler than the final test. Ultimately, at least one simulation that can take days or weeks is still necessary.

“We saw a problem with the current workflow of using gem5; not in the simulation itself, but in how programs were prepared to be simulated,” Neal writes. “If you need to use more than 1 or 2 small programs, the setup cost alone becomes intractable.”

The goal of Lapidary was to speed up the process of creating these checkpoints, finally cutting down the runtime of every step of the test.

What Neal realized is that the data contained in a checkpoint is essentially just a dump of the state of the program at that moment – things like the simulation’s configuration settings, CPU register contents, and virtual memory space. These are all things you can pull from a program when it’s running directly on hardware with a debugger. And so, Neal did just that.

Lapidary works by running a program directly on hardware and dumping its register and core information at a fixed interval. It uses each dump to create a gem5 checkpoint, then runs those checkpoints on the simulated hardware rather than the full original program. It also lets several instances of gem5 run in parallel so that multiple checkpoints can be simulated at once.
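
The general shape of that workflow can be sketched in a few lines of Python. This is not Lapidary’s actual code or interface: `checkpoint_offsets`, `simulate_checkpoint`, and `run_all` are hypothetical names, and the simulation step is a placeholder that only records the offset it was given where a real tool would launch the simulator.

```python
from concurrent.futures import ThreadPoolExecutor


def checkpoint_offsets(total_seconds: float, interval_seconds: float) -> list[float]:
    """Return the fixed-interval offsets (in seconds) strictly inside the run."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    offsets = []
    n = 1
    while n * interval_seconds < total_seconds:
        offsets.append(n * interval_seconds)
        n += 1
    return offsets


def simulate_checkpoint(offset: float) -> dict:
    """Hypothetical stand-in for one short simulation started from a checkpoint."""
    # Placeholder only: a real tool would start the simulator from saved state here.
    return {"offset": offset, "simulated": True}


def run_all(offsets: list[float], workers: int = 4) -> list[dict]:
    """Run the per-checkpoint simulations concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(simulate_checkpoint, offsets))


if __name__ == "__main__":
    offsets = checkpoint_offsets(total_seconds=10.0, interval_seconds=2.5)
    results = run_all(offsets)
    print(f"{len(results)} checkpoints simulated")
```

Because each checkpoint is independent of the others, the short simulations can be spread across as many workers as the machine allows.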

By creating checkpoints this way rather than from a full simulated run, Neal was able to cut this step of the process down from days or weeks to a couple of hours, on average.

Each of these simulations is short, covering millions of instructions rather than billions. Run across many checkpoints (several hundred for Neal’s projects), they represent a large enough portion of the benchmark program to produce statistically significant performance measurements.
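
One common way to turn many short samples into a single estimate is to report their mean with a confidence interval. The sketch below assumes a normal approximation and a 95% interval (z = 1.96). The article does not say which statistical method Lapidary uses, and the sample values are made-up placeholders, not results.

```python
import math
import statistics


def mean_with_confidence(samples: list[float], z: float = 1.96) -> tuple[float, float]:
    """Return (mean, half_width) of a normal-approximation confidence interval."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate spread")
    mean = statistics.fmean(samples)
    # Standard error of the mean: sample standard deviation over sqrt(n).
    stderr = statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, z * stderr


if __name__ == "__main__":
    # Placeholder per-checkpoint measurements (e.g. instructions per cycle).
    per_checkpoint = [1.0, 2.0, 3.0]
    mean, half_width = mean_with_confidence(per_checkpoint)
    print(f"estimate: {mean:.2f} +/- {half_width:.2f}")
```

The half-width shrinks with the square root of the number of samples, which is why several hundred short checkpoints can stand in for one very long run.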

Neal and collaborators plan to expand the functionality of Lapidary’s open-source release, now that the “research quality” version has been used successfully on three of the students’ projects. Some of their intended next steps are integration with cloud services, compressing the currently very large checkpoint files, and enabling support for custom instructions.

To learn more about the tool, read Neal’s blog post or see the Lapidary Git repository.

To learn more about the project that inspired Lapidary, read “Researchers design new solution to widespread side-channel attacks.”
