11 papers by CSE researchers presented at CHI 2024

Eleven papers by researchers affiliated with CSE have been accepted for presentation at the 2024 ACM Conference on Human Factors in Computing Systems, commonly called CHI, taking place May 11-16 in Honolulu, Hawai’i. Two of these 11 papers received the honorable mention designation, an honor awarded to just 150 papers out of more than 4,000 total submissions.

CHI serves as the premier international platform for researchers and practitioners in human-computer interaction (HCI) to come together and share the latest advances in the field. New research by CSE faculty and students covers a range of topics in HCI, from combating smartphone overuse and balancing privacy with personalization to developing human-AI sound awareness systems and using AI to enhance video-based surgical lessons.

The following papers were presented at the conference. Names of CSE researchers appear in bold, including School of Information faculty with courtesy appointments in CSE.

Main Track

Tao Lu, Hongxiao Zheng, Tianying Zhang, Xuhai Xu, Anhong Guo

Abstract: Smartphone overuse poses risks to people’s physical and mental health. However, current intervention techniques mainly focus on explicitly changing screen content (i.e., output) and often fail to persistently reduce smartphone overuse due to being over-restrictive or over-flexible. We present the design and implementation of InteractOut, a suite of implicit input manipulation techniques that leverage interaction proxies to weakly inhibit the natural execution of common user gestures on mobile devices. We present a design space for input manipulations and demonstrate 8 Android implementations of input interventions. We first conducted a pilot lab study (N=30) to evaluate the usability of these interventions. Based on the results, we then performed a 5-week within-subject field experiment (N=42) to evaluate InteractOut in real-world scenarios. Compared to the traditional and common timed lockout technique, InteractOut significantly reduced the usage time by an additional 15.6% and opening frequency by 16.5% on participant-selected target apps. InteractOut also achieved a 25.3% higher user acceptance rate, and resulted in less frustration and better user experience according to participants’ subjective feedback. InteractOut demonstrates a new direction for smartphone overuse intervention and serves as a strong complementary set of techniques with existing methods.

Jingying Wang, Haoran Tang, Taylor Kantor, Tandis Soltani, Vitaliy Popov, Xu Wang

Abstract: Videos are prominent learning materials to prepare surgical trainees before they enter the operating room (OR). In this work, we explore techniques to enrich the video-based surgery learning experience. We propose Surgment, a system that helps expert surgeons create exercises with feedback based on surgery recordings. Surgment is powered by a few-shot-learning-based pipeline (SegGPT+SAM) to segment surgery scenes, achieving an accuracy of 92%. The segmentation pipeline enables functionalities to create visual questions and feedback desired by surgeons from a formative study. Surgment enables surgeons to 1) retrieve frames of interest through sketches, and 2) design exercises that target specific anatomical components and offer visual feedback. In an evaluation study with 11 surgeons, participants applauded the search-by-sketch approach for identifying frames of interest and found the resulting image-based questions and feedback to be of high educational value.

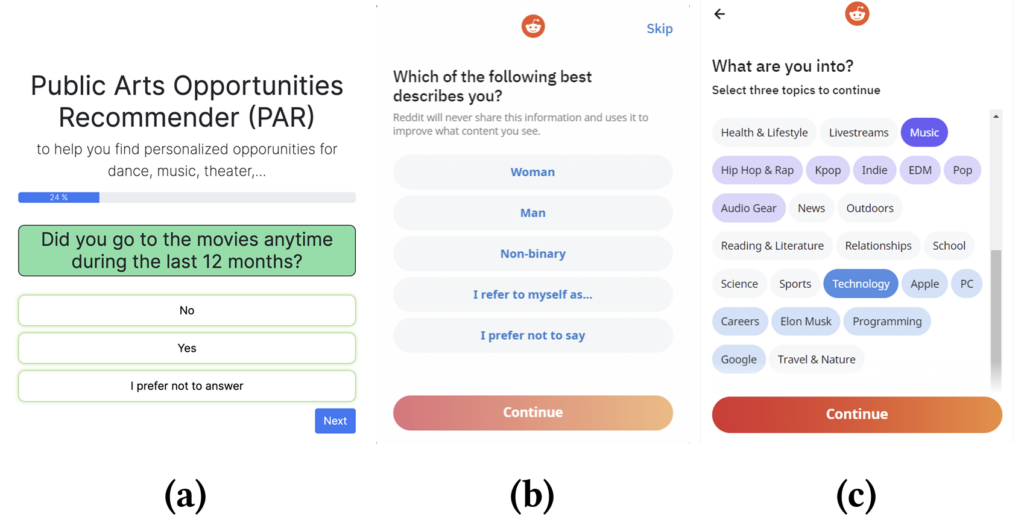

Sumit Asthana, Jane Im, Zhe Chen, Nikola Banovic

Abstract: Personalization improves user experience by tailoring interactions relevant to each user’s background and preferences. However, personalization requires information about users that platforms often collect without their awareness or their enthusiastic consent. Here, we study how the transparency of AI inferences on users’ personal data affects their privacy decisions and sentiments when sharing data for personalization. We conducted two experiments where participants (N=877) answered questions about themselves for personalized public arts recommendations. Participants indicated their consent to let the system use their inferred data and explicitly provided data after awareness of inferences. Our results show that participants chose restrictive consent decisions for sensitive and incorrect inferences about them and for their answers that led to such inferences. Our findings expand existing privacy discourse to inferences and inform future directions for shaping existing consent mechanisms in light of increasingly pervasive AI inferences.

“Looking Together ≠ Seeing the Same Thing: Understanding Surgeons’ Visual Needs During Intra-operative Coordination and Instruction” – Honorable Mention

Vitaliy Popov, Xinyue Chen, Jingying Wang, Michael Kemp, Gurjit Sandhu, Taylor Kantor, Natalie Mateju, Xu Wang

Abstract: Shared gaze visualizations have been found to enhance collaboration and communication outcomes in diverse HCI scenarios including computer supported collaborative work and learning contexts. Given the importance of gaze in surgery operations, especially when a surgeon trainer and trainee need to coordinate their actions, research on the use of gaze to facilitate intra-operative coordination and instruction has been limited and shows mixed implications. We performed a field observation of 8 surgeries and an interview study with 14 surgeons to understand their visual needs during operations, informing ways to leverage and augment gaze to enhance intra-operative coordination and instruction. We found that trainees have varying needs in receiving visual guidance which are often unfulfilled by the trainers’ instructions. It is critical for surgeons to control the timing of the gaze-based visualizations and effectively interpret gaze data. We suggest overlay technologies, e.g., gaze-based summaries and depth sensing, to augment raw gaze in support of surgical coordination and instruction.

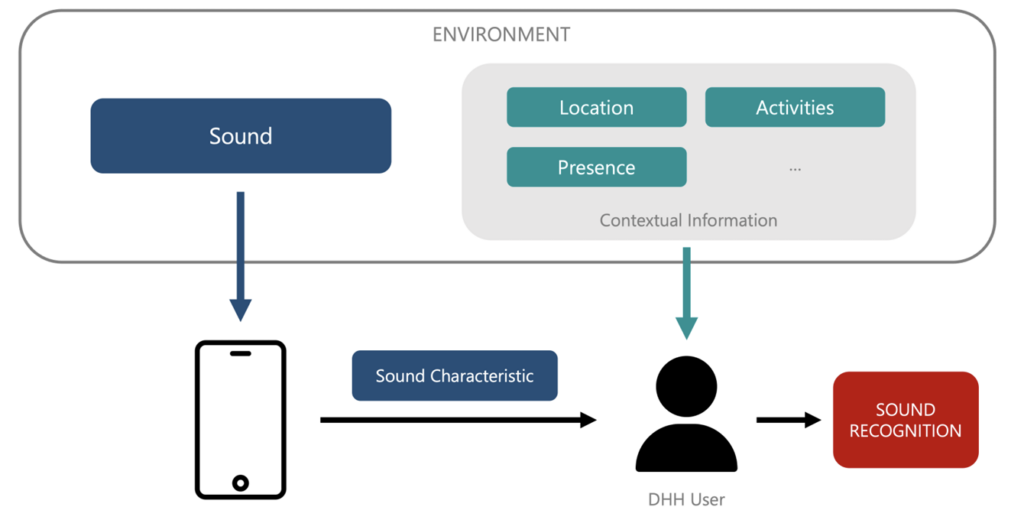

“Show, Not Tell: A Human-AI Collaborative Approach for Designing Sound Awareness Systems”

Jeremy Zhengqi Huang, Reyna Wood, Hriday Chhabria, Dhruv Jain

Abstract: Current sound recognition systems for deaf and hard of hearing (DHH) people identify sound sources or discrete events. However, these systems do not distinguish similar sounding events (e.g., a patient monitor beep vs. a microwave beep). In this paper, we introduce HACS, a novel futuristic approach to designing human-AI sound awareness systems. HACS assigns AI models to identify sounds based on their characteristics (e.g., a beep) and prompts DHH users to use this information and their contextual knowledge (e.g., “I am in a kitchen”) to recognize sound events (e.g., a microwave). As a first step for implementing HACS, we articulated a sound taxonomy that classifies sounds based on sound characteristics using insights from a multi-phased research process with people of mixed hearing abilities. We then performed a qualitative (with 9 DHH people) and a quantitative (with a sound recognition model) evaluation. Findings demonstrate the initial promise of HACS for designing accurate and reliable human-AI systems.

“Authors’ Values and Attitudes Towards AI-bridged Scalable Personalization of Creative Language Arts” – Honorable Mention

Taewook Kim, Hyomin Han, Eytan Adar, Matthew Kay, John Joon Young Chung

Abstract: Generative AI has the potential to create a new form of interactive media: AI-bridged creative language arts (CLA), which bridge the author and audience by personalizing the author’s vision to the audience’s context and taste at scale. However, it is unclear what the authors’ values and attitudes would be regarding AI-bridged CLA. To identify these values and attitudes, we conducted an interview study with 18 authors across eight genres (e.g., poetry, comics) by presenting speculative but realistic AI-bridged CLA scenarios. We identified three benefits derived from the dynamics between author, artifact, and audience: those that 1) authors get from the process, 2) audiences get from the artifact, and 3) authors get from the audience. We found how AI-bridged CLA would either promote or reduce these benefits, along with authors’ concerns. We hope our investigation hints at how AI can provide intriguing experiences to CLA audiences while promoting authors’ values.

“Shared Responsibility in Collaborative Tracking for Children with Type 1 Diabetes and their Parents”

Yoon Jeong Cha, Yasemin Gunal, Alice Wou, Joyce Lee, Mark Newman, Sun Young Park

Abstract: Efficient Type 1 Diabetes (T1D) management necessitates comprehensive tracking of various factors that influence blood sugar levels. However, tracking health data for children with T1D poses unique challenges, as it requires the active involvement of both children and their parents. This study aims to uncover the benefits, challenges, and strategies associated with collaborative tracking for children (ages 6-12) with T1D and their parents. Over a three-week data collection probe study with 22 child-parent pairs, we found that collaborative tracking, characterized by the shared responsibility of tracking management and data provision, yielded positive outcomes for both children and their parents. Drawing from these findings, we delineate four distinct tracking approaches: child-independent, child-led, parent-led, and parent-independent. Our study offers insights for designing health technologies that empower both children and parents in learning and encourage the sharing of different perspectives through collaborative tracking.

“Understanding the Effect of Reflective Iteration on Individuals’ Physical Activity Planning”

Kefan Xu, Xinghui (Erica) Yan, Myeonghan Ryu, Mark Newman, Rosa Arriaga

Abstract: Many people do not get enough physical activity. Establishing routines to incorporate physical activity into people’s daily lives is known to be effective, but many people struggle to establish and maintain routines when facing disruptions. In this paper, we build on prior self-experimentation work to assist people in establishing or improving physical activity routines using a framework we call “reflective iteration.” This framework encourages individuals to articulate, reflect upon, and iterate on high-level “strategies” that inform their day-to-day physical activity plans. We designed and deployed a mobile application, Planneregy, that implements this framework. Sixteen U.S. college students used the Planneregy app for 42 days to reflectively iterate on their weekly physical exercise routines. Based on an analysis of usage data and interviews, we found that the reflective iteration approach has the potential to help people find and maintain effective physical activity routines, even in the face of life changes and temporary disruptions.

Maulishree Pandey, Steve Oney, Andrew Begel

Abstract: Code readability is crucial for program comprehension, maintenance, and collaboration. However, many of the standards for writing readable code are derived from sighted developers’ readability needs. We conducted a qualitative study with 16 blind and visually impaired (BVI) developers to better understand their readability preferences for common code formatting rules such as identifier naming conventions, line length, and the use of indentation. Our findings reveal how BVI developers’ preferences contrast with those of sighted developers and how we can expand the existing rules to improve code readability on screen readers. Based on the findings, we contribute an inclusive understanding of code readability and derive implications for programming languages, development environments, and style guides. Our work helps broaden the meaning of readable code in software engineering and accessibility research.

Benjamin Berens, Florian Schaub, Mattia Mossano, Melanie Volkamer

Abstract: Two popular approaches for helping consumers avoid phishing threats are phishing awareness videos and tools supporting users in identifying phishing emails. Awareness videos and tools have each been shown on their own to increase people’s phishing detection rate. Videos have been shown to be a particularly effective awareness measure; link-centric warnings have been shown to provide effective tool support. However, it is unclear how these two approaches compare to each other. We conducted a between-subjects online experiment (n=409) in which we compared the effectiveness of the NoPhish video and the TORPEDO tool and their combination. Our main findings suggest that the TORPEDO tool outperformed the NoPhish video and that the combination of both performs significantly better than just the tool. We discuss the implications of our findings for the design and deployment of phishing awareness measures and support tools.

alt.chi

“Hexing Twitter: Channeling Ancient Magic to Bind Mechanisms of Extraction”

Nel Escher, Nikola Banovic

Abstract: Imagining different futures contests the hegemony of surveillance capitalism. Yet, strong forces naturalize existing platforms and their extractive practices. We set out to challenge dominant scripts, such as the “addiction” model for social media overuse, which pathologizes users as afflicted with disordered habits that require reform. We take inspiration from the subversive potential of magic, long used by marginalized people for transforming relationships and generating new realities. We present a technical intervention that curses the Twitter platform by invoking the Homeric story of Tithonus—a prince who was granted eternal life but not eternal youth. Our design probe takes form in a browser extension that sabotages a mechanism of extraction; it impairs the infinite scroll functionality by progressively rotting away content as it loads. By illustrating the enduring ability of magic to contest current conditions, we contribute to a broader project of everyday resistance against the extractive logics of surveillance capitalism.