New technique for memory page placement integrated into Linux kernel

An increasingly data-driven world means that today’s data centers hold extremely large volumes of information and must process enormous workloads. These hyperscale applications bring an ever-expanding demand for memory, making memory an increasingly large share of data center power consumption and overall cost. Given the massive size and scale of these data centers, even incremental improvements in memory efficiency can translate into billions of dollars in savings.

Addressing this challenge is a memory-saving technique designed by a team of University of Michigan researchers, including computer science and engineering PhD student Hasan Al Maruf and Professor Mosharaf Chowdhury. Their technique, reported in their paper TPP: Transparent Page Placement for CXL-Enabled Tiered-Memory and presented at the 2023 Architectural Support for Programming Languages and Operating Systems (ASPLOS) conference, has recently been integrated into the Linux kernel. This means that their page placement mechanism is being deployed by hyperscale data centers across the world, where Linux is the dominant operating system.

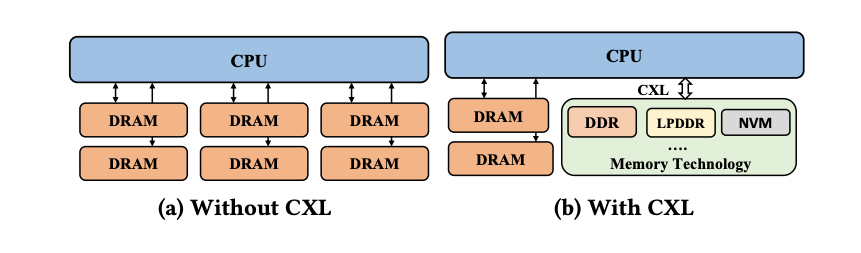

Because of growing memory demands, more and more data centers are disaggregating memory by decoupling it from their central processing units (CPUs). A new interconnect technology called Compute Express Link (CXL) has opened up new opportunities for disaggregation by separating memory from data center CPUs while still enabling efficient communication between them.

“CXL decouples memory from the CPU, so you can put any type of memory technology at the other end,” says Maruf. “Even if you are using a new CPU, it will still be compatible with previous generations of memory technologies. This allows data centers to reuse their earlier generation of DIMMs, rather than simply throwing them away.”

The ability to keep using existing memory alongside CPU upgrades via CXL means considerable cost savings. “These data centers don’t need to spend billions of dollars upgrading their memory hardware, and because they can use multiple types of memory, they have the flexibility of building a server that consumes less power,” Maruf says. “As power consumption drives a significant portion of data center expenditures, this minimizes the total capital cost of operations.”

The challenge with this approach, however, is that decoupling via CXL increases the distance between the CPU and the memory. That added distance means higher access latency, which in turn degrades performance.

“This is where our mechanism comes in,” says Maruf. “We want to use this setup, but we don’t want to sacrifice performance.”

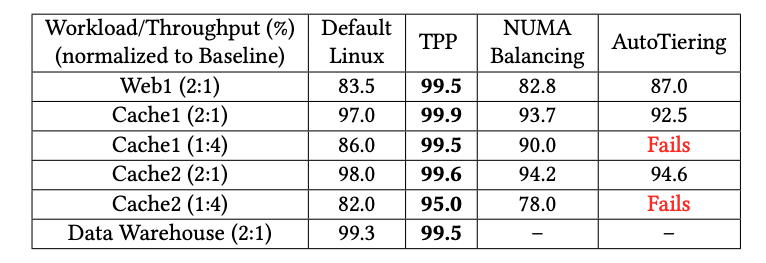

To accomplish this, Maruf and his coauthors developed a technique called transparent page placement (TPP). TPP automatically places memory pages into tiers according to how frequently they are used, which speeds up memory access and boosts overall performance.

“Hot memory is the most frequently accessed, so with our technique, those pages will always reside in the hottest memory tier and will be closest to the CPU,” Maruf explains. “Pages that haven’t been accessed for a considerable amount of time, for instance, are considered cold memory and are placed in a lower tier.”

TPP is based on a lightweight mechanism that automatically identifies each page’s temperature (hot or cold) and places it in the appropriate tier. It proactively demotes colder pages from local CPU memory to CXL memory and promotes hot pages from CXL memory back to local memory, freeing up scarce space in fast local memory and speeding up access to frequently used pages.
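To make the hot/cold idea concrete, here is a toy Python model of two memory tiers. It is not TPP’s kernel code: the class name, the `headroom` parameter, and the use of a page’s last-access time as its “temperature” are all illustrative assumptions. New pages land in local memory; whenever local memory fills past a reserved amount of free headroom, the least recently used page is demoted to the CXL tier, and touching a page that sits in the CXL tier promotes it back to local memory.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TieredMemory:
    """Hypothetical two-tier model: fast local DRAM and slower CXL-attached memory."""
    local_capacity: int          # number of pages the local tier can hold
    headroom: int = 1            # hypothetical: local slots kept free by proactive demotion
    local: Dict[int, int] = field(default_factory=dict)  # page -> last access time
    cxl: Dict[int, int] = field(default_factory=dict)    # page -> last access time

    def __post_init__(self) -> None:
        # Headroom must leave at least one usable local slot.
        if not 0 <= self.headroom < self.local_capacity:
            raise ValueError("headroom must be in [0, local_capacity)")

    def _demote_coldest(self) -> None:
        # "Coldest" here simply means least recently accessed.
        coldest = min(self.local, key=self.local.get)
        self.cxl[coldest] = self.local.pop(coldest)

    def reclaim(self) -> None:
        # Proactive demotion: keep `headroom` local slots free for upcoming
        # allocations and promotions.
        while len(self.local) > self.local_capacity - self.headroom:
            self._demote_coldest()

    def access(self, page: int, now: int) -> str:
        """Touch a page; return the tier it was found in ('local', 'cxl', or 'new')."""
        if page in self.local:
            self.local[page] = now
            return "local"
        found = "new"
        if page in self.cxl:
            # A page accessed on the slow tier is treated as hot and promoted.
            del self.cxl[page]
            found = "cxl"
        self.local[page] = now
        self.reclaim()
        return found


if __name__ == "__main__":
    mem = TieredMemory(local_capacity=3, headroom=1)
    for t, page in enumerate([1, 2, 3, 2, 1]):
        print(t, page, mem.access(page, now=t))
    # expected final state: local: [1, 2] cxl: [3]
    print("local:", sorted(mem.local), "cxl:", sorted(mem.cxl))
```

Keeping a few local slots free means a newly allocated or promoted page rarely has to wait for a demotion to complete. A practical system would also need to avoid moving a page back and forth because of a single stray access, which this sketch does not attempt.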

With more and more data centers turning to heterogeneous, CXL-based memory systems, TPP’s ability to make memory access faster and more efficient has attracted wide-scale attention. Now that its source code is part of the Linux kernel, the team’s TPP solution is being deployed on a broad basis, including at tech giants such as Meta.

“If you download Linux OS, our page placement solution will be in it,” Maruf says. “TPP has become the industry standard.”
