Students harness generative AI to create accessibility-enhancing software systems

Students in EECS 495: Software for Accessibility celebrated the end of the semester with a showcase of their final projects, an impressive collection of apps and programs designed to improve accessibility for individuals with disabilities.

EECS 495 is taught by Prof. Dave Chesney, whose courses focus on applying computing for the greater good. His Software for Accessibility course gives students the opportunity to learn the ins and outs of software development with real-world impact at the core of their designs. This semester, Chesney also encouraged his students to use generative AI where applicable.

“With ChatGPT and other forms of generative AI in the public consciousness, much of the discourse surrounding these technologies has been very pessimistic,” said Chesney. “I wanted to challenge students to think of and demonstrate ways in which GenAI can instead be helpful to society.”

Over the course of the semester, students conceptualized, designed, and built software programs in small teams. Their creations address accessibility from various angles, taking on everything from real-time sign language translation and alt text generation to physical therapy and automated medication scheduling. Their finished products also took diverse forms, including mobile apps, browser extensions, and even a virtual reality program.

The student apps developed and presented at the EECS 495 showcase include the following:

ADHD Reader

Developed by Brandon Fox, Jacob Harwood, Sophie Johnecheck, and Shuyu Wu

For individuals with ADHD, reading long documents can feel insurmountable, as symptoms such as hyperactivity and difficulty concentrating make it hard to stay focused while reading. It can also be challenging for people with ADHD to comprehend and retain what they have read.

To help alleviate these issues, this student team developed ADHD Reader, a document reading assistant for individuals with ADHD. This app breaks long documents into small segments that are easier to digest, and it gives readers encouragement and rewards for completing milestones at various points in their reading. ADHD Reader also prompts users to take frequent breaks, an essential coping strategy for readers with ADHD, enabling them to rest and restore their focus.

Other highlights of ADHD Reader include a progress bar that shows readers how much of a given document they’ve read so far. The program also responds quickly, with low wait times that make for a seamless, easy-to-use experience.
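As a rough illustration of the ideas described above (segmenting a document, tracking progress, and prompting breaks), here is a minimal sketch. It is not the team’s implementation: the fixed word-count chunking, the 100-word segment size, and the “break after every three segments” rule are all hypothetical choices made for the example.

```python
def split_into_segments(text, max_words=100):
    """Break a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def progress_percent(completed, total):
    """Percentage of segments read, suitable for driving a progress bar."""
    if total == 0:
        return 100
    return round(100 * completed / total)


def should_suggest_break(completed, break_every=3):
    """Suggest a pause after every `break_every` finished segments (hypothetical rule)."""
    return completed > 0 and completed % break_every == 0


# Example: walk through a 250-word placeholder document.
document = " ".join(f"word{i}" for i in range(250))
segments = split_into_segments(document)
for done in range(1, len(segments) + 1):
    note = " (time for a short break)" if should_suggest_break(done) else ""
    print(f"Segment {done}/{len(segments)}: {progress_percent(done, len(segments))}% read{note}")
```

A real reader would more likely split on sentence or paragraph boundaries so that no segment ends mid-thought; word-count chunking simply keeps the sketch short.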

AI Reading Assistant

Developed by Rishiraj Chandra, Nathan Curdo, Sonika Amarish Potnis, and Daniel Sanchez

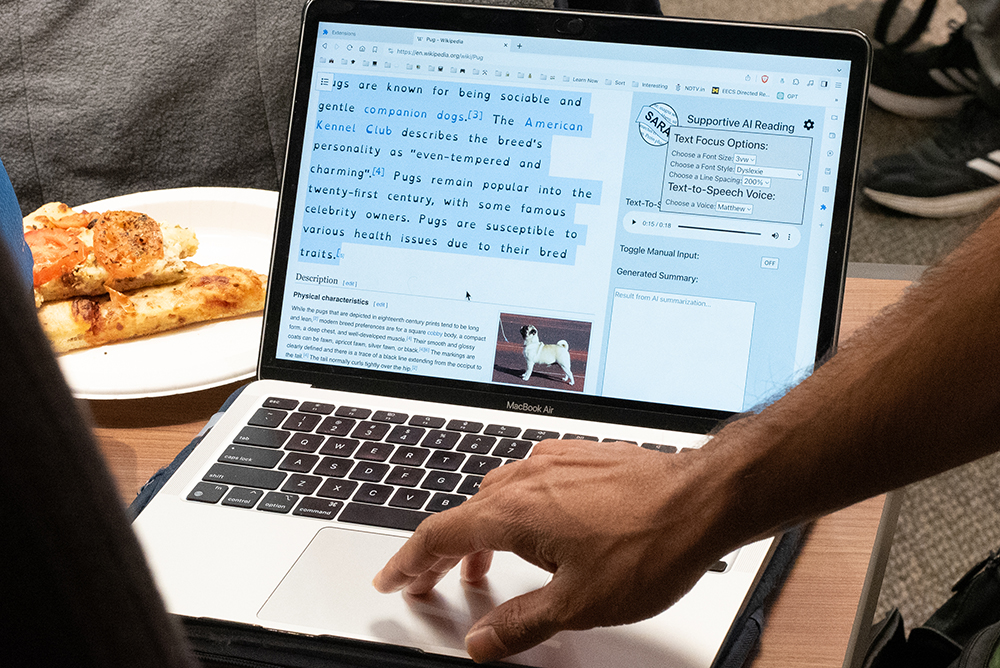

Capitalizing on this year’s theme of GenAI, this student team designed AI Reading Assistant, a browser extension intended to help people with dyslexia and other reading disorders better comprehend text online.

After installing the extension, users can highlight text on a text-heavy webpage and click the AI Reading Assistant button in their browser. The program then uses generative AI to quickly summarize the highlighted text in an adjustable sidebar, and it also offers a text-to-speech option for those who prefer to hear the text aloud.

In all, AI Reading Assistant makes text-heavy pages much easier to digest and understand for individuals with reading disorders.

Altify

Developed by Blake Brdak, Qiyue Huang, Yanran Lin, and Marsh Reynolds

A vital component of accessibility in technology today is alt text, a text description of images and other graphic elements that screen readers can read aloud for users with visual impairments and low vision. Although the use of alt text is critical in ensuring an equitable online experience for these individuals, it is far from widespread. In fact, one 2019 study found that just 0.1 percent of tweets with images included alt text.

With this in mind, this student team developed Altify, a program that automatically generates alt text for pages that are missing it. The app parses a given webpage to determine where alt text may be needed and then uses generative AI to quickly create descriptions for images and other graphics.

Altify, in turn, makes for a much fuller and more accessible online experience for users who rely on screen readers.
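The first step described for Altify, finding the places on a page where alt text is missing, can be sketched with Python’s standard-library `html.parser`. This is a hypothetical illustration, not the team’s code: it flags only `<img>` tags that have no `alt` attribute at all (an empty `alt=""` is the accepted way to mark purely decorative images), and it leaves out the generative AI step that would actually write the descriptions.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # An empty alt="" deliberately marks a decorative image,
        # so only a completely absent alt attribute is flagged.
        if "alt" not in attr_map:
            self.missing.append(attr_map.get("src"))


def find_images_missing_alt(html):
    """Return the src values of images that need alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


page = (
    '<p>Quarterly update</p>'
    '<img src="cat.png" alt="A cat on a sofa">'
    '<img src="chart.png">'
    '<img src="divider.png" alt="">'
)
print(find_images_missing_alt(page))
```

Each flagged `src` would then be handed to an image-description model, whose output gets written back into the page as the image’s `alt` attribute.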

CareTalk: The EMT App

Developed by Camden Do, Justin Li, Ashuman Madhukar, and Cullen Ye

Emergency medical services are crucial but not always accessible. Patients who are deaf or hard of hearing, nonverbal, or non-English-speaking may struggle to communicate their condition or needs to first responders in an emergency situation.

To address this, these students developed CareTalk, a mobile app meant to facilitate communication between patients and EMTs. The app has three primary features: a medical profile, where patients can enter and store their medical history, including allergies and preexisting conditions; a communication tool that lets patients and first responders exchange information such as the patient’s condition and pain level; and a health literacy aid that uses GenAI to provide educational information in response to patient questions.

CareTalk thus offers a critical, potentially life-saving tool for patients who might otherwise struggle to communicate in emergencies.

FrequenceEase

Developed by Ashley Wenqi Chen, Justin Fischer, Karina Wang, and Sarah Xu

Along with wisdom and experience, one hallmark of old age is reduced hearing. In fact, up to half of adults aged 75 or older experience some form of hearing loss, the most common being high-frequency hearing loss. This type of hearing loss in particular can significantly hamper people’s ability to fully enjoy music, podcasts, videos, and other forms of media.

To remedy this, this student team developed FrequenceEase, a mobile app that adjusts audio frequencies to accommodate high-frequency hearing loss. The app features a frequency tester that gauges how well a user hears sounds at different frequencies, then adjusts the phone’s audio based on the results so that sound falls within the user’s range of hearing.

FrequenceEase thus allows users with high-frequency hearing loss to more fully enjoy and experience auditory media on their phones.

PictureBoard.ai

Developed by Oliver Gao, Blake Mischley, and Aidan Tucker

Many learning and neurodevelopmental disorders can make spoken communication difficult for children. As an alternative or supplement to speech for nonverbal children, caregivers and educators often use picture boards: collections of symbols, pictures, or photos that a child can point to or otherwise indicate in order to communicate with those around them.

To make this process easier, this student team has developed PictureBoard.ai, a program that uses GenAI to create custom picture boards tailored to a user’s needs.

Users simply input a prompt, from a general subject (e.g., animals) to a specific image (e.g., a frog in a purple necktie), and the program uses generative AI to create a collection of relevant images. This ability to quickly create picture boards customized to their needs broadens the scope of possible communication for nonverbal children.

SignSavvy

Developed by Steffi Bentley, Tamarakro Moni, Sarah Stec, and Fisher Wojtas

The Covid-19 pandemic saw a huge surge in video communication as in-person meetings moved online. While this was a helpful development for many, the video format is not accessible to everyone, including people who are deaf or hard of hearing. Closed captioning has come a long way, and typing can be an option for some, but American Sign Language (ASL) users are often left without a way to converse in the language they may feel most comfortable with.

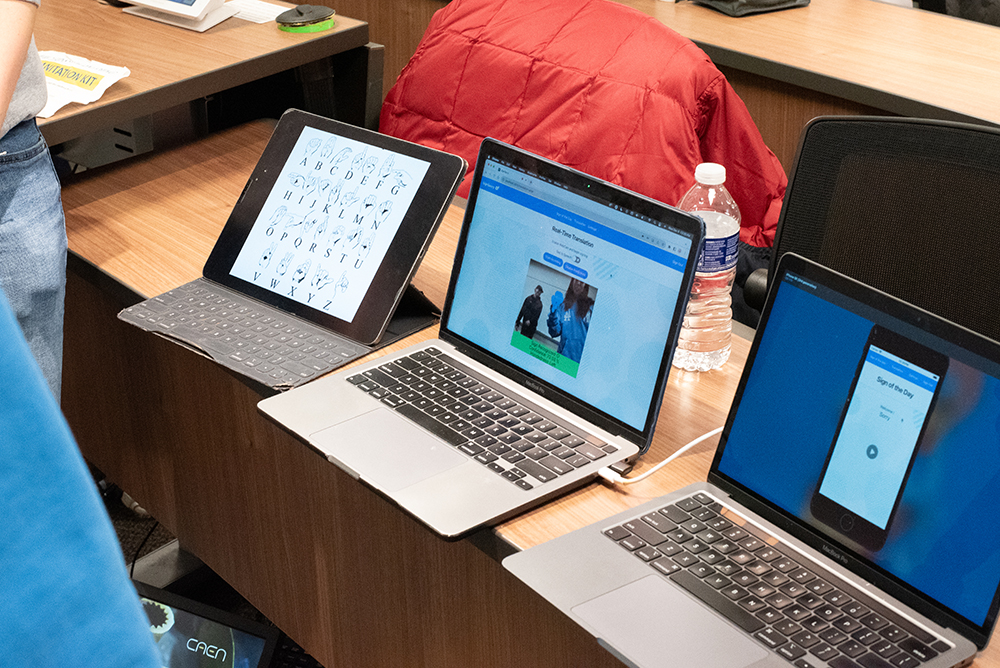

This student team sought to address this issue through SignSavvy, an app for real-time translation of ASL. The app applies computer vision to video to track hand movements and recognize gestures, then matches those gestures to signs and generates a text translation.

SignSavvy also has an educational component for ASL learners, including a sign of the day feature that scrapes the web for relevant and useful signs. In all, the app enables more streamlined and natural video communications for ASL users.

SmartDose

Developed by Apurva Desai, Michelle Li, Esha Maheshwari, and Nathan Schweikhart

For older adults and individuals with chronic illness, managing multiple medications at varying doses and times can be a serious challenge. That complexity can also lead to nonadherence, such as missed or incorrect doses, compromising the effectiveness of treatment.

In response, this student team has designed SmartDose, an end-to-end medication management tool that tracks and dispenses the necessary medications to patients when they need to take them. SmartDose includes a mobile app where users can input information about their medications, dosages, and schedule. This information is then used to program a physical device that sorts, stores, and dispenses the right medication at the right time.

SmartDose takes the guesswork out of medication management, with the aim of reducing nonadherence and improving treatment outcomes.

VR4PT

Developed by Yousif Askar, Ryan Kim, Joseph Manni, and Caleb Smith

Following injury or surgery, many patients are prescribed a physical therapy regimen to support their recovery. However, many of the exercises can be repetitive and tedious, leading some to abandon them.

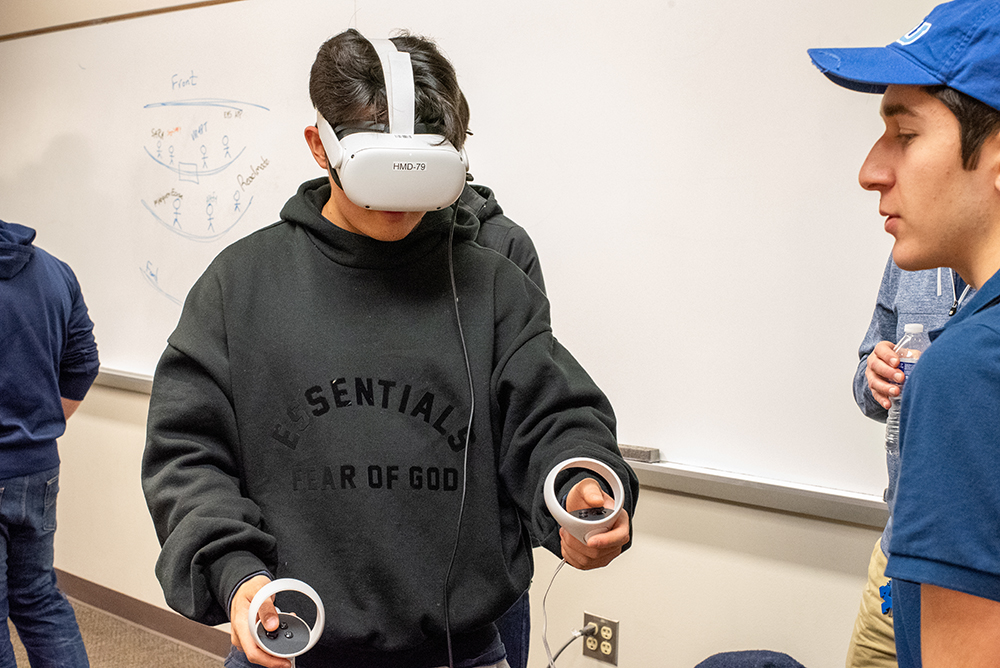

This student team aimed to make physical therapy more enjoyable and boost adherence through VR4PT, a virtual reality platform that turns PT exercises into a fun gaming experience. Wearing a VR headset, the user selects from various exercises and then uses handheld controllers to perform movements when prompted. The platform focuses on shoulder exercises, and an in-game rep counter records a correct repetition each time the user’s arm reaches the target position.

Featuring smooth transitions and an intuitive user interface, VR4PT makes physical therapy fun and immersive, promoting adherence and better healing outcomes.
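A rep counter of the kind described for VR4PT can be sketched as a threshold check with a reset: a rep counts when the arm reaches the target position, and the next rep counts only after the arm has been lowered again, so holding the arm up is not counted repeatedly. This is a hypothetical sketch, not the team’s code. The per-frame arm elevation angles, the 90° target, and the 30° reset threshold are assumptions; the article does not say how VR4PT measures arm position.

```python
def count_reps(arm_angles, target=90.0, reset=30.0):
    """Count reps from a sequence of arm elevation angles in degrees.

    A rep is counted when the angle reaches `target` while the counter is
    armed; the counter re-arms only once the angle drops to `reset` or
    below. Both thresholds are hypothetical values for illustration.
    """
    reps = 0
    armed = True  # ready to count the next rep
    for angle in arm_angles:
        if armed and angle >= target:
            reps += 1
            armed = False  # ignore further high samples until the arm lowers
        elif not armed and angle <= reset:
            armed = True
    return reps


# Hypothetical per-frame samples derived from a handheld controller's position.
samples = [10, 45, 92, 95, 60, 20, 50, 91, 40, 25, 88]
print(count_reps(samples))
```

Using two thresholds rather than one keeps small jitters around the target angle from being counted as extra reps.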
