University of Michigan SEAGULL team wins Alexa Prize SimBot Challenge

A team of nine University of Michigan researchers in computer science and engineering and robotics has won the Alexa Prize SimBot Challenge for their innovative design of the SEAGULL SimBot. Beyond earning the team the $500,000 first-place prize, the work demonstrates the potential for interactive embodied agents to take on increasingly complex tasks.

The Amazon-sponsored SimBot Challenge tasked teams with developing virtual robots that can complete complicated, real-world tasks through continuous learning. The robots were showcased in an interactive puzzle game launched with the prompt, “Alexa, play with robot.” The challenge drew teams from leading institutions around the globe, bringing together some of the brightest minds in the field to explore and extend the possibilities of virtual assistants.

Prof. Joyce Chai is faculty advisor to the SEAGULL (Situated and Embodied Agents with the ability of GroUnded Language Learning) team and director of the U-M Situated Language and Embodied Dialogue (SLED) lab. She emphasizes the importance of the SimBot Challenge in pushing the boundaries of embodied artificial intelligence. “Enabling embodied AI agents represents the pinnacle of AI, as it encompasses nearly every aspect of the field,” says Chai. “The SimBot Challenge provides an excellent platform for the team to explore this large joint space and identify unique research challenges and opportunities.”

SEAGULL team member, SLED lab member, and recent robotics graduate Nikhil Devraj agrees. “We want embodied AI agents out and about in the world, not stuck in the corners of our labs,” he says, “but there are myriad technical challenges we need to solve before accomplishing this. One such challenge is making them intelligently interact with real people. The Alexa Prize SimBot Challenge posed this exact problem in a simulated environment that real people could interact with via voice commands. This requires innovations in natural language understanding and generation, vision, and planning.”

The SEAGULL team’s SimBot stood out from the competition in exactly these areas, responding to user commands and performing tasks with ease. It showed an exceptional ability to comprehend complex requests and give appropriate, high-quality responses, making for a seamless and engaging user experience.

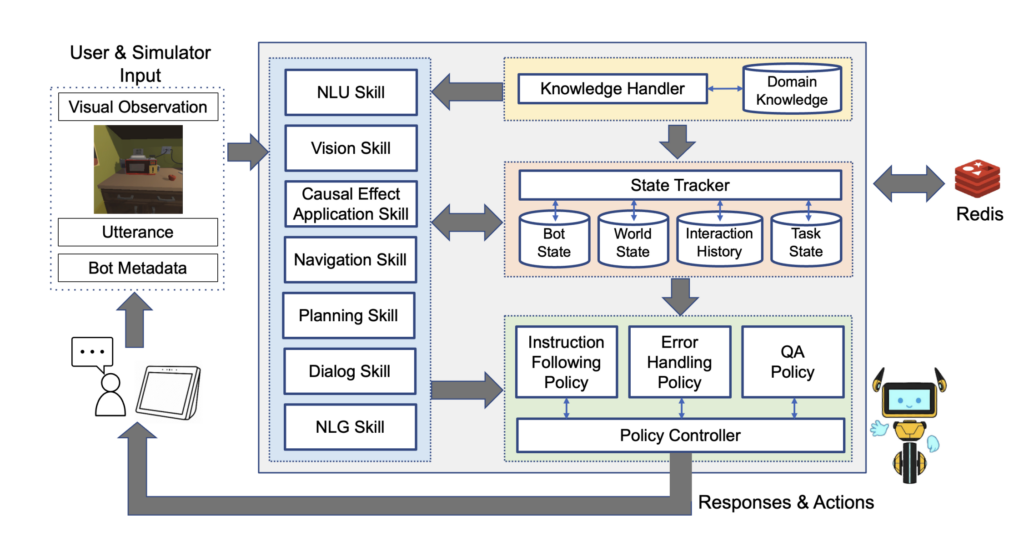

To accomplish this, the SEAGULL SimBot design uses a modular structure integrating both symbolic and neural components. This includes a natural language module that converts user requests into symbolic representations based on their semantics and intentions, as well as a neural vision module to detect various object features and their spatial relations. Their design also incorporates built-in tools to collect and analyze vision and language data in order to continuously improve the system’s performance.

“I believe the key advantage of our bot lies in its awareness,” says SEAGULL team leader and CSE PhD student Yichi Zhang. “This includes its situational awareness, which refers to the bot’s understanding of the current context. In SEAGULL, we design a comprehensive state tracking system that tracks a vast amount of information… This wealth of information informs every decision the bot makes, enabling it to consistently make rational decisions.”

The team’s bot also demonstrates social awareness, taking into account a user’s social cues. “An intelligent bot should not only follow the commands about what to do, but also respond appropriately to the user’s social intentions,” says Zhang. “For instance, users may praise the bot when it performs well, express frustration or disappointment when it falls short, or pose questions driven by curiosity about the game. In SEAGULL, we have taken a step further to facilitate such social interactions, a dimension that has remained largely unexplored.”

In addition to driving progress in embodied AI and the many areas it encompasses, the SimBot Challenge also gave participants unprecedented opportunities for collaboration. Jed Yang, SEAGULL team co-leader and CSE PhD student, stresses this point. “As AI doctoral students, we often find ourselves operating independently or, at most, within small groups, focusing on projects with relatively brief timeframes,” he says. “In contrast, the current AI landscape demands that high-impact projects be undertaken by larger, coordinated teams over more extended periods. This is precisely what the SimBot Challenge necessitated: a balanced mix of collaboration, complexity, and long-term commitment.”

This collaborative environment was key to the SEAGULL team’s success and enabled them to develop innovations that advance embodied AI technology. Through their SimBot design, the team has introduced techniques that could make virtual assistants more knowledgeable, more responsive, and more capable of performing complex real-world tasks.

For Zhang, however, the team’s work to make their bot into a widely available and fully functioning tool is only beginning. “Our goal is to build more general and robust embodied AI systems that can be used by a wider audience,” he says. “We plan to iterate on our models, by addressing the limitations we identified during the challenge. We also plan to integrate our models with physical robots in real-world settings to test their transferability and generalizability.”
