Five papers by CSE researchers to be presented at POPL 2024

Five papers by researchers affiliated with CSE have been accepted for presentation at the 2024 Symposium on Principles of Programming Languages (POPL), taking place January 14-20 in London.

Sponsored by the ACM Special Interest Group on Programming Languages (SIGPLAN), POPL is a premier international forum for researchers and practitioners specializing in programming languages and systems. A top conference in this area, POPL aims to encourage and promote research that makes “principled, enduring contributions” to the design, understanding, and application of programming languages.

The papers to be presented by CSE authors at POPL contribute several new findings and innovations related to programming languages, including a novel error localization and recovery method for type systems, a new algorithm for searching programs with local variables, proposed techniques for testing specifications in the Dafny programming language, and more.

The papers being presented are as follows, with the names of authors affiliated with CSE in bold:

POPL Main Conference

“Total Type Error Localization and Recovery with Holes” – Distinguished Paper Award

Eric Zhao, Raef Maroof, Anand Dukkipati, Andrew Blinn, Zhiyi Pan, Cyrus Omar

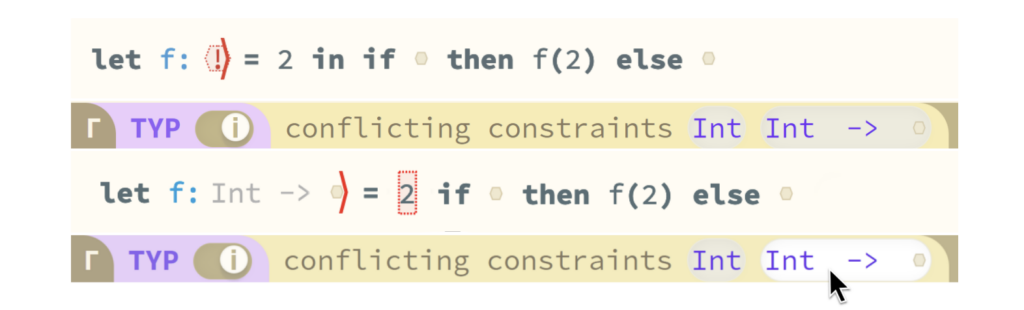

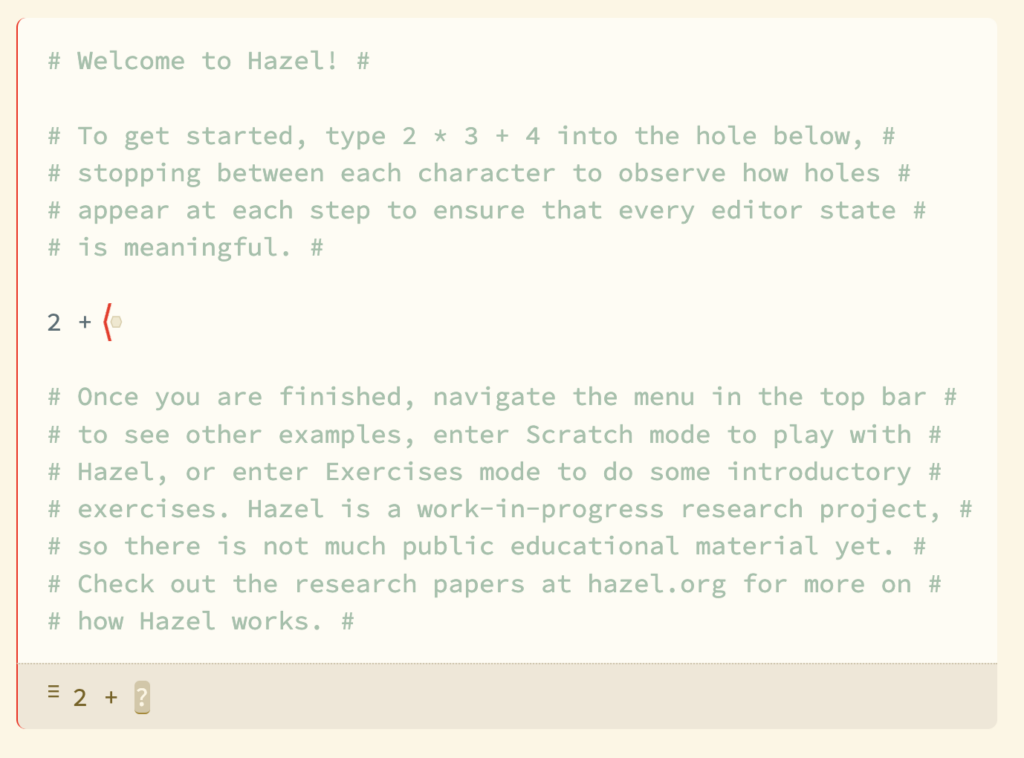

Abstract: Type systems typically only define the conditions under which an expression is well-typed, leaving ill-typed expressions formally meaningless. This approach is insufficient as the basis for language servers driving modern programming environments, which are expected to recover from simultaneously localized errors and continue to provide a variety of downstream semantic services. This paper addresses this problem, contributing the first comprehensive formal account of total type error localization and recovery: the marked lambda calculus. In particular, we define a gradual type system for expressions with marked errors, which operate as non-empty holes, together with a total procedure for marking arbitrary unmarked expressions. We mechanize the metatheory of the marked lambda calculus in Agda and implement it, scaled up, as the new basis for Hazel, a full-scale live functional programming environment with, uniquely, no meaningless editor states.

The marked lambda calculus is bidirectionally typed, so localization decisions are systematically predictable based on a local flow of typing information. Constraint-based type inference can bring more distant information to bear in discovering inconsistencies but this notoriously complicates error localization. We approach this problem by deploying constraint solving as a type-hole-filling layer atop this gradual bidirectionally typed core. Errors arising from inconsistent unification constraints are localized exclusively to type and expression holes, i.e., the system identifies unfillable holes using a system of traced provenances, rather than localized in an ad hoc manner to particular expressions. The user can then interactively shift these errors to particular downstream expressions by selecting from suggested partially consistent type hole fillings, which returns control back to the bidirectional system. We implement this type hole inference system in Hazel.

“Efficient Bottom-Up Synthesis for Programs with Local Variables”

Xiang Li, Xiangyu Zhou, Rui Dong, Yihong Zhang, Xinyu Wang

Abstract: We propose a new synthesis algorithm that can efficiently search programs with local variables (e.g., those introduced by lambdas). Prior bottom-up synthesis algorithms are not able to evaluate programs with free local variables, and therefore cannot effectively reduce the search space of such programs (e.g., using standard observational equivalence reduction techniques), making synthesis slow. Our algorithm can reduce the space of programs with local variables. The key idea, dubbed lifted interpretation, is to lift up the program interpretation process, from evaluating one program at a time to simultaneously evaluating all programs from a grammar. Lifted interpretation provides a mechanism to systematically enumerate all binding contexts for local variables, thereby enabling us to evaluate and reduce the space of programs with local variables. Our ideas are instantiated in the domain of web automation. The resulting tool, Arborist, can automate a significantly broader range of challenging tasks more efficiently than state-of-the-art techniques including WebRobot and Helena.

Programming for the Planet (PROPL) Workshop

“Toward a Live, Rich, Composable, and Collaborative Planetary Compute Engine”

Alexander Bandukwala, Andrew Blinn, Cyrus Omar

Abstract: Addressing the climate crisis poses many computing challenges for a variety of stakeholders, many of whom are not CS experts but rather scientists, policymakers, journalists, and members of the public. In order to solve these challenges there needs to be a large-scale collaborative compute engine that is live, rich, composable, and collaborative. Specifically, we present Planet Hazel, a vision of the Hazel programming environment geared toward planetary computing.

Dafny Workshop

“Testing Specifications In Dafny”

Eli Goldweber, Weixin Yu, Armin Vakil, Manos Kapritsos

Abstract: The guarantees of formally verified systems are only as strong as their trusted specifications. As observed by previous studies, bugs in formal specifications could invalidate the assurances that proofs provide. These types of specification bugs are difficult to debug and have been found to exist in practice.

Beyond manual inspection, there is currently no structured way to gain confidence that a specification is correct. Due to the trusted role specifications take, it would be impossible to prove a specification correct. We take a compromised approach, of testing specifications to help increase trust in specification correctness; who watches the watchers?

In this paper, we propose automatic and manual techniques to enable the ability to test specifications in Dafny. Drawing inspiration from classical software testing practices we introduce IronSpec, a framework for testing specifications. IronSpec enables automatic specification mutation testing to identify cases of specification weakness. This automation is complemented by a unit testing methodology for writing Spec-Testing Proofs (STPs) for a given specification. We evaluate IronSpec on nine specifications, including three specifications of open-source verified systems. Our initial results show that IronSpec is effective at flagging discrepancies between the original specification and the intent of the test writer, and has led to the discovery of specification bugs in each of the three open-source verified systems.

“Domesticating Automation”

Pranav Srinivasan, Oded Padon, Jon Howell, Andrea Lattuada

Abstract: Automated theorem proving throws compute at a problem to save effort for humans. It promises to enable scaling of verification-based development to large-scale practical systems. To do so, the user experience must remain snappy at scale, which means understanding how to prevent automation from slowing down at scale.

We propose a framework for spending automation budget and argue that large scale projects will ultimately require coarse-grained module automation boundaries. We report on our experience trying to use tooling on a large verified research system project to identify candidate boundaries: we found one candidate and addressed it with modest success, but the experience suggests the tools and approach have much room to improve.

MENU

MENU