Improving the accuracy and applicability of large language models, like ChatGPT

From helping researchers identify promising solutions to teaching children critical thinking skills, generative models like ChatGPT are revolutionizing how the world uses and understands data, but they have limitations.

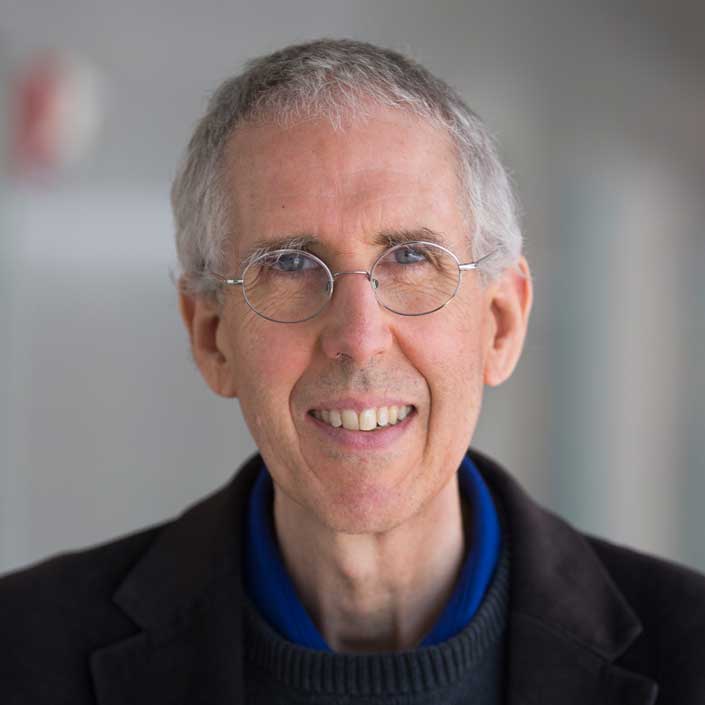

“If you train an image classifier on cats, it’s probably not going to be very good at classifying dogs,” said Alfred Hero, the John H. Holland Distinguished University Professor of EECS and R. Jamison and Betty Williams Professor of Engineering.

To solve this problem, Hero led a team that developed a new method of recalibrating pre-trained neural networks for different domains, which greatly improves their applicability and reduces the risk of AI hallucinations.

“With our algorithm, practitioners can use a lot more data, even when it’s collected from different types of modalities under different conditions,” Hero said. “The resulting predictive model will be more accurate and reliable.”


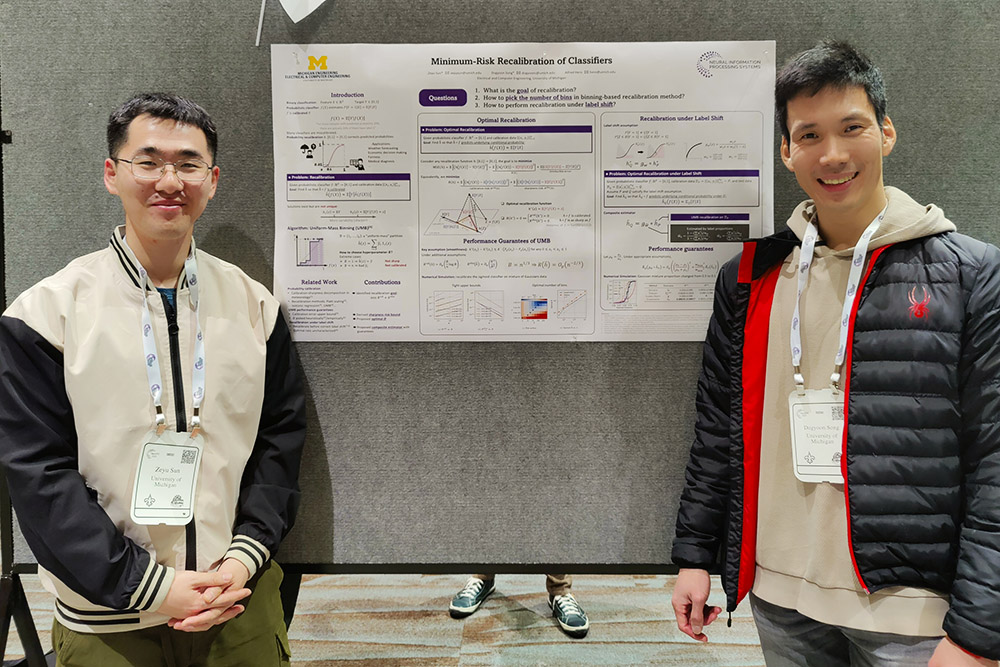

The research, “Minimum-Risk Recalibration of Classifiers,” co-authored by ECE PhD student Zeyu Sun and ECE Research Fellow Dogyoon Song, was selected as a spotlight paper at the 2023 NeurIPS conference held last week in New Orleans. It is one of two U-M ECE research projects celebrated at the prestigious conference – Prof. Samet Oymak’s work on how chatbots use their own machine learning algorithms to decide what to pay attention to in a conversation was selected as a NeurIPS 2023 spotlight poster.

Neural networks, which include large language models (LLMs) like ChatGPT, analyze data by classifying complex patterns in images, text, sounds, and more to generate accurate insights and predictions. However, these networks can also generate false insights and predictions, which are known as “AI hallucinations.” AI hallucinations are more likely when a model that has been trained on a specific set of data is then used to classify a similar but new set of data.

“The issue is you don’t always have the ability to retrain a model on your own unique data set,” Hero said. “It is more helpful to use a very sophisticated model that has already been trained on a similar data set, but then you need to recalibrate it to your dataset.”

The team’s method, “minimum-risk recalibration,” focuses on probabilistic classifiers: models, such as an LLM, that generate a prediction and then report the probability that the prediction is correct. The team quantified the trade-off between how accurate the model’s predictions are overall (its average calibration) and how accurate the model is for an individual prediction (its sharpness). They then derived an optimal algorithm to achieve the best possible calibration when applying pre-trained models to new datasets.
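To make the idea of recalibration concrete, here is a minimal sketch of histogram binning, a standard textbook recalibration technique. It is an illustration only and should not be read as the team’s published algorithm. The function names (`fit_binning`, `recalibrate`, `demo`), the simulated miscalibrated model, and the choice of 10 bins are all assumptions made for this example.

```python
import bisect
import random


def fit_binning(scores, labels, n_bins):
    """Fit a histogram-binning recalibrator from (score, label) pairs.

    Bins hold roughly equal numbers of calibration points. Each bin's
    output is the fraction of positive labels observed in that bin.
    """
    if len(scores) < n_bins:
        raise ValueError("need at least one calibration point per bin")
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    edges, rates = [], []
    for b in range(n_bins):
        lo, hi = b * n // n_bins, (b + 1) * n // n_bins
        chunk = pairs[lo:hi]
        rates.append(sum(y for _, y in chunk) / len(chunk))
        if b < n_bins - 1:
            # The first score of the next bin is this bin's upper edge.
            edges.append(pairs[hi][0])
    return edges, rates


def recalibrate(score, edges, rates):
    """Map a raw model score to the recalibrated probability of its bin."""
    return rates[bisect.bisect_right(edges, score)]


def brier(probs, labels):
    """Mean squared error between predicted probabilities and 0/1 labels."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


def demo(seed=0, n=2000, n_bins=10):
    """Compare Brier scores before and after recalibration on held-out data."""
    rng = random.Random(seed)

    def sample(m):
        true_p = [rng.random() for _ in range(m)]
        labels = [1 if rng.random() < p else 0 for p in true_p]
        # Hypothetical pre-trained model: it ranks cases correctly but
        # reports p**2 instead of p, so its probabilities are too low.
        scores = [p ** 2 for p in true_p]
        return scores, labels

    cal_scores, cal_labels = sample(n)    # small calibration set
    test_scores, test_labels = sample(n)  # held-out evaluation set
    edges, rates = fit_binning(cal_scores, cal_labels, n_bins)
    fixed = [recalibrate(s, edges, rates) for s in test_scores]
    return brier(test_scores, test_labels), brier(fixed, test_labels)


if __name__ == "__main__":
    before, after = demo()
    print(f"Brier score before: {before:.3f}, after: {after:.3f}")
```

The number of bins is where a trade-off appears in this sketch: fewer bins give each bin more data and a more reliable estimate, but coarser probabilities, while more bins give finer-grained probabilities that are each estimated from fewer points.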

“Whatever your feelings are on AI, the reality is that it’s here to stay,” Hero said. “Wouldn’t you rather have AI that doesn’t make errors and is fairer in making decisions that could impact your life?”


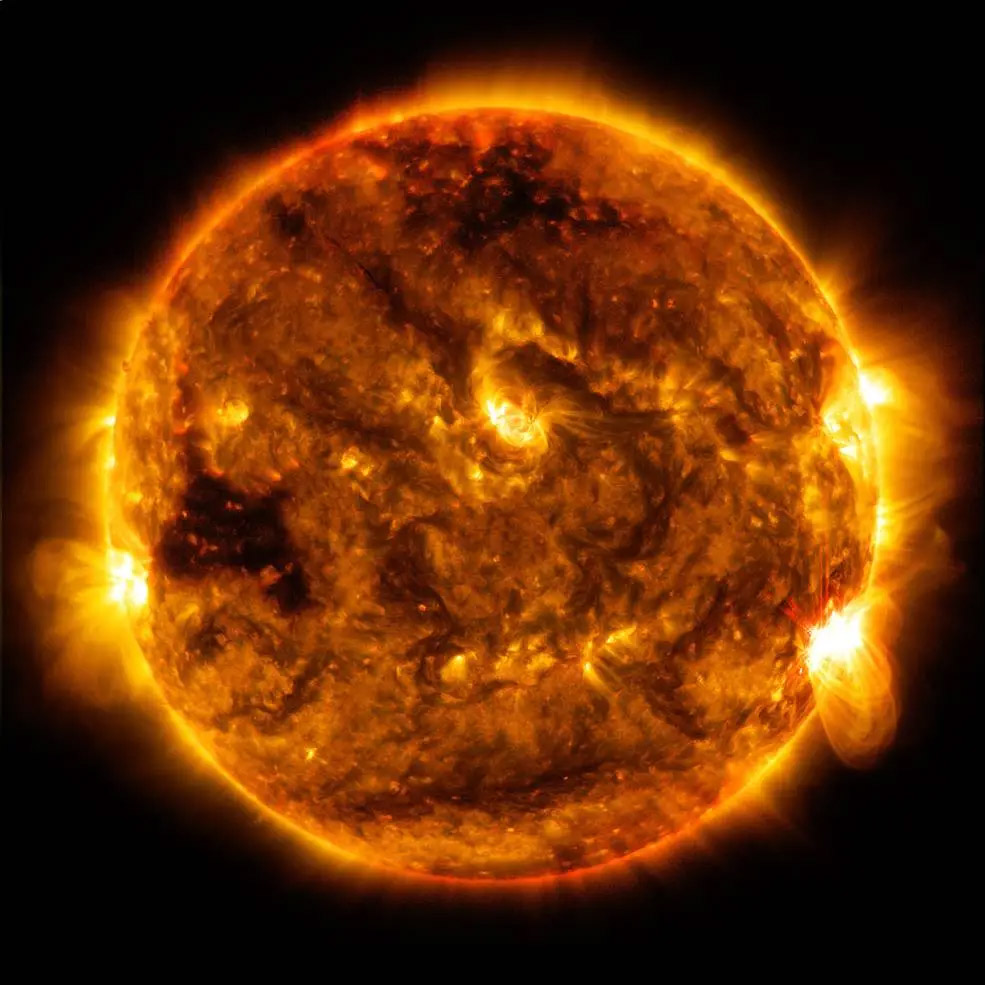

One specific scenario Hero’s team considered was predicting the likelihood of solar flares, which are large eruptions of electromagnetic radiation from the Sun that can disrupt satellite operations, radio communications, and power grids.

In a previous research collaboration with the Department of Climate and Space Sciences and Engineering, Hero’s team used data from two different generations of satellites to train a solar flare prediction model. Before being retired several years ago, the previous-generation satellite had collected substantial data from years of tracking sunspots and their correlation with solar flare activity. The new satellite had collected less data, but the measurements were more accurate and the labels had evolved to better describe recent trends in sunspot cycles and intensities.

Hero’s team applied their experience from that project to recalibrate classifiers that were trained on older data, such as measurements from the previous satellite, by using a smaller amount of data from a different modality, such as the new satellite.

“By using this algorithm to recalibrate a pre-trained model, the result is much better than if you were to simply train a model exclusively on your unique, small dataset,” Hero said. “It’s a significant, mathematically proven boost.”

For this project, the team focused on binary classification (e.g., “Is there a high likelihood for a solar flare to occur soon? Yes or no”), but they are next looking to apply the work to multi-class classification problems (“Which intensity class will this solar flare fall into: B, C, M, or X?”).
