Researchers Employ Unsupervised Funniness Detection in the New Yorker Cartoon Caption Contest

In their study, the researchers took a computational approach to studying the contest to gain insights into what differentiates funny captions from the rest.

How do we know what’s funny, and can machine learning and big data techniques be used to identify the essence of humor?

Prof. Dragomir Radev and his former student, alumnus Rahul Jha, together with colleagues from Yahoo! Labs and Columbia University, recently teamed up with New Yorker Cartoon Editor Bob Mankoff to take a computational approach to understanding humor. Their paper, posted on arxiv.org and titled “Humor in Collective Discourse: Unsupervised Funniness Detection in the New Yorker Cartoon Caption Contest,” describes their findings.

The New Yorker's weekly Cartoon Caption Contest has been running for more than 10 years. Each week, the editors post a cartoon and ask readers to come up with a funny caption for it. They pick the top three submitted captions and ask readers to vote for the weekly winner. The contest has become a cultural phenomenon and has generated a lot of discussion about what makes a caption funny.

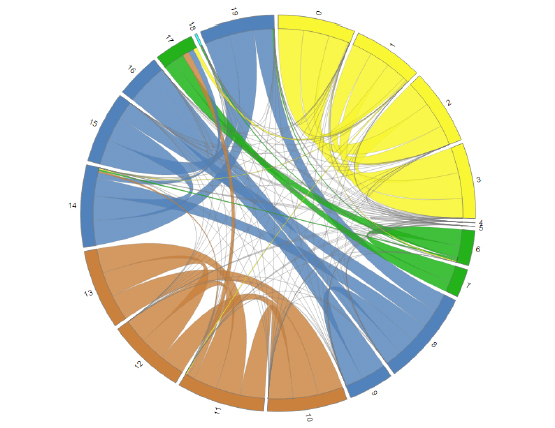

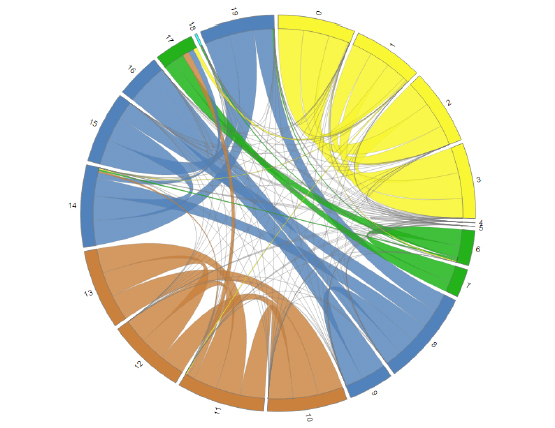

In their study, the researchers took a computational approach to studying the contest to gain insights into what differentiates funny captions from the rest. They developed a set of unsupervised methods for ranking captions based on features such as originality, centrality, sentiment, concreteness, grammaticality, and human-centeredness. They used each of these methods to independently rank all captions from the New Yorker’s corpus of cartoons and selected the top captions for each method. Then, they performed Amazon Mechanical Turk experiments in which they asked Turkers to judge which of the selected captions was funnier.
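To give a feel for what ranking captions by a single unsupervised feature can look like, here is a minimal Python sketch of a centrality-style score. It is an illustration under stated assumptions, not the researchers' method: it assumes a caption's centrality can be approximated by its average bag-of-words cosine similarity to the other captions for the same cartoon, and the function names and example captions are invented for this sketch.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a caption and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_centrality(captions):
    """Return (score, caption) pairs sorted from most to least central.

    Assumption for this sketch: centrality is the mean cosine similarity
    of a caption to every other caption submitted for the same cartoon.
    """
    bags = [Counter(tokenize(c)) for c in captions]
    scored = []
    for i, caption in enumerate(captions):
        others = [cosine(bags[i], bags[j]) for j in range(len(bags)) if j != i]
        score = sum(others) / len(others) if others else 0.0
        scored.append((score, caption))
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Hypothetical captions, invented for illustration only.
captions = [
    "I told you the meeting could have been an email.",
    "The meeting could have been an email.",
    "My lawyer advised me not to answer that.",
]
ranking = rank_by_centrality(captions)
for score, caption in ranking:
    print(f"{score:.3f}  {caption}")
```

In this toy example, the two captions that share a theme score higher than the one that shares no words with the others. A ranking like this needs no labeled examples of funny captions, which is what makes the approach unsupervised.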

The researchers were able to show that negative sentiment, human-centeredness, and lexical centrality most strongly match the funniest captions, followed by positive sentiment. These results are useful both for understanding humor and for designing more engaging conversational agents in text and multimodal (vision+text) systems.

As part of this work, a large set of cartoons and captions is being made available to the community so that other researchers may experiment further.
